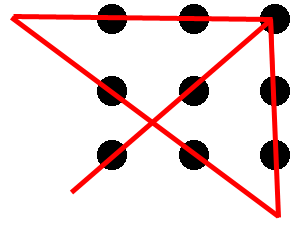

Four-card problem

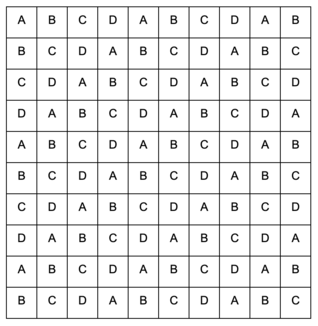

Four playing cards are placed in front of you. Two are face-down, one with a red back and one with a black back. Two are face-up: an ace and a queen.

Which cards must you turn over to check that every ace has a black back?

You need to turn over the ace and the red-backed card:

- If the ace doesn’t have a black back, the rule is broken.

- If the red-backed card is an ace, the rule is also broken.

Note that both the face-down card with a black back and the queen can have anything on the other side without violating the rule.
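The reasoning above can be checked mechanically: a card must be turned over exactly when some possible hidden side would break the rule. The following Python sketch enumerates the hidden possibilities for each visible card; the card encoding (`("face", ...)` and `("back", ...)` tuples) is an illustrative assumption.

```python
# Each visible card is ("face", value) or ("back", colour); the other side is hidden.
FACES = ("ace", "queen")
BACKS = ("red", "black")

def violates(face, back):
    """'Every ace has a black back' fails only for a red-backed ace."""
    return face == "ace" and back == "red"

def must_turn(card):
    """True if some possible hidden side would break the rule."""
    side, shown = card
    if side == "face":
        return any(violates(shown, back) for back in BACKS)
    return any(violates(face, shown) for face in FACES)

cards = [("back", "red"), ("back", "black"), ("face", "ace"), ("face", "queen")]
to_turn = [card for card in cards if must_turn(card)]
print(to_turn)  # [('back', 'red'), ('face', 'ace')]
```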

Commentary

This is also known as the Wason selection task.

Five-card trick

A magician and their assistant perform a trick with a shuffled deck of cards. While the magician is blindfolded, the assistant asks a member of the audience to draw 5 cards at random from the deck. The audience member hands the 5 cards to the assistant, who examines them, hands one of them back to the audience member, arranges the remaining four cards and places them face down in a neatly stacked pile on a table.

The audience member is then allowed to move the pile on the table or change its orientation without disturbing the order of the cards in the pile. This is to ensure that the assistant can’t control the position of the pile.

The magician now removes their blindfold, examines the four cards on the table and determines the card held by the audience member from the four-card pile.

Playing cards

How was this trick performed if the magician used an ordinary 52-card deck?

Out of the 5 drawn cards, the assistant chooses 2 cards which have the same suit.

Represent the value of the cards as their face value, with Ace=1, Jack=11, Queen=12, King=13.

Call the difference between the values of the two cards \(d\). Out of the two chosen cards, if \(d \le 6\) then select the higher value card; otherwise select the lower value card.

Pass the selected card back to the audience member. Put the other card face down on the table; this will form the bottom of the pile for the magician.

Use the 3 remaining cards to encode a value, \(p\). If \(d \le 6\) then set \(p = d\), otherwise set \(p = 13 - d\). Place the 3 cards on the pile.

The magician can now look at the pile. From the bottom card in the pile the magician knows the suit of the selected card.

Let the value of the bottom card be \(v\). From the order of the 3 top cards the magician can determine \(p\). If \(p + v \le 13\) then the value of the selected card is \(p + v\); otherwise it is \(p + v - 13\).

Proof

There will always be 2 cards of the same suit among the 5 drawn cards, because there are only 4 suits (by the pigeonhole principle).

Call the values of the 2 cards with the same suit \(v_1\) and \(v_2\) such that \(v_1 < v_2\). It is the case that either \(v_1 + p = v_2\) or \(v_2 + p \equiv v_1 \pmod{13}\) for some \(0 < p \le 6\).

If it were the case that \(v_1 + p = v_2\) with \(6 < p < 13\), then we could write it equivalently as \(v_2 + (13 - p) \equiv v_1 \pmod{13}\) with \(0 < 13 - p \le 6\).

Let \(d = v_2 - v_1\) (i.e. the difference between the values). If \(d \le 6\) then \(p = d\), otherwise \(p = 13 - d\) as in the description of the trick.

There are 6 possible values for \(p\): 1, 2, 3, 4, 5 and 6. We can encode \(3! = 6\) numbers by controlling the order of 3 cards. Thus we are able to encode \(p\) with the order of our three remaining cards.
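The steps above can be simulated to check that the magician always names the right card. The description does not say *which* order of the 3 cards stands for which value of \(p\), so this Python sketch assumes one possible convention: rank the 3 cards by value (breaking ties with a hypothetical suit order `SUITS`) and take the lexicographic index of their order in the pile, plus one, as \(p\).

```python
import itertools
import random

SUITS = "SHDC"  # hypothetical fixed suit order, only used to break ties in value
DECK = [(value, suit) for suit in SUITS for value in range(1, 14)]
ORDERS = list(itertools.permutations(range(3)))  # 3! = 6 orders encode p = 1..6

def rank(card):
    value, suit = card
    return (value, SUITS.index(suit))

def assistant(hand):
    """Return (card handed back, pile) with pile[0] as the bottom card."""
    # Pigeonhole principle: two of the five cards share a suit.
    low, high = next(
        sorted(pair) for pair in itertools.combinations(hand, 2) if pair[0][1] == pair[1][1]
    )
    d = high[0] - low[0]
    if d <= 6:
        hidden, bottom, p = high, low, d
    else:
        hidden, bottom, p = low, high, 13 - d
    rest = sorted((c for c in hand if c not in (hidden, bottom)), key=rank)
    return hidden, [bottom] + [rest[i] for i in ORDERS[p - 1]]

def magician(pile):
    """Name the hidden card from the bottom card and the order of the other 3."""
    bottom, *top = pile
    ranked = sorted(top, key=rank)
    p = ORDERS.index(tuple(ranked.index(c) for c in top)) + 1
    value = bottom[0] + p
    if value > 13:
        value -= 13  # wrap around: the hidden card was the lower one
    return (value, bottom[1])

random.seed(0)
for _ in range(10_000):
    hand = random.sample(DECK, 5)
    hidden, pile = assistant(hand)
    assert magician(pile) == hidden
print("all hands decoded correctly")
```

Any other agreed mapping from the 6 orders to \(p\) would work equally well; the tie-break on suit only matters because two cards in the pile may share a value.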

Extended case

What is the maximum number of distinct cards the magician could perform this trick with?

The maximum size deck the trick can be performed with is 124.

In the general case the audience member draws \(n\) cards, we select one, and use the other \(n - 1\) cards to tell the magician the selected card. Here the maximum sized deck is \(n! + n - 1\) cards.

Algorithm

We will use the following definitions:

- Let the size of the deck be \(N = n! + n - 1\).

- Let the cards in the deck be represented by numbers from \(0\) to \(N - 1\).

- Let \(c_i\) be the values of the cards drawn by the audience member such that \(0 \le i < n \text{ and } c_i < c_{i+1}\).

- Let \(c_i^\prime\) be the values of the cards seen by the magician such that \(0 \le i < n - 1 \text{ and } c_i^\prime < c_{i+1}^\prime\).

- Let \(s = \left(\sum c_i\right) \mod n\).

- Let \(s^\prime = \left(\sum c_i^\prime\right) \mod n\).

- Let \(p = \left\lfloor \frac{c_s - s}{n} \right\rfloor\).

Choose \(c_s\) as the selected card.

Encode \(p\) as a permutation of the \(n - 1\) remaining cards, and set them in a pile.

The magician then looks at the pile and calculates \(p\) from the permutation of the cards in the pile and \(s^\prime\) from the sum of the cards in the pile. From that the magician calculates the value:

\[\chi = p n + (-s^\prime \mod n)\]

The magician can then claim that \(c_s\) is the \(\chi\)th card, counting from zero, which is not in the pile (not in \(c_i^\prime\)). Formally:

\[c_s = \chi + k \text{ where } c_{k-1}^\prime < \chi + k < c_{k}^\prime \text{ and } 0 \le k \le n - 1\]

with the conventions \(c_{-1}^\prime = -\infty\) and \(c_{n-1}^\prime = +\infty\).

Proof of correctness

We will split this proof into two parts. The first will prove that the assistant will be able to follow the instructions, and the second will prove that the magician can correctly identify the selected card.

Choosing \(c_s\) and \(p\)

There are at least \(n - s\) cards greater than or equal to \(c_s\): \(\{c_i \mid s \le i < n\}\).

There are at least \(s\) cards smaller than \(c_s\): \(\{c_i \mid 0 \le i < s\}\).

\[\begin{align} s \le & c_s \le N - (n - s) \\ s \le & c_s \le n! - 1 + s \\ 0 \le & c_s - s \le n! - 1 \\ \left\lfloor \frac{0}{n} \right\rfloor \le & \left\lfloor \frac{c_s - s}{n} \right\rfloor \le \left\lfloor \frac{n! - 1}{n} \right\rfloor \\ 0 \le & \left\lfloor \frac{c_s - s}{n} \right\rfloor < (n - 1)! \end{align}\]

We can now see from the definition of \(p\) that \(0 \le p < (n - 1)!\) and therefore \(p\) can be encoded as a permutation of \(n - 1\) cards. In addition, \(s\) is a number taken modulo \(n\) thus \(0 \le s < n\) and therefore it is always possible to select a card \(c_s\).

Determining \(c_s\)

Define \(q\) and \(r\) as follows:

\[c_s - s = q n + r \text{ s.t. } 0 \le r < n\]

Since \(\sum c_i = c_s + \sum c_i^\prime\), the definitions of \(s\) and \(s^\prime\) give:

\[\begin{align} c_s + s^\prime \equiv & s \pmod n \notag \\ c_s - s \equiv & -s^\prime \pmod n \notag \\ q n + r \equiv & -s^\prime \pmod n \notag \\ r \equiv & -s^\prime \pmod n \notag \\ r = & -s^\prime \mod n \text{ because } 0 \le r < n \end{align}\]

Rearranging to solve for \(q\) we find that:

\[q = \frac{c_s - s - r}{n} = \left\lfloor \frac{c_s - s}{n} \right\rfloor = p\]

Substituting our calculated values for \(q\), \(r\) and using the equation for \(\chi\):

\[\begin{align} c_s - s = & p n + (-s^\prime \mod n) = \chi \notag \\ c_s = & \chi + s \end{align}\]

Given this, it is sufficient to show that \(k = s\) is the unique solution to the equation for \(c_s\) used by the magician.

Let \(k = s + \Delta\):

\[\begin{align} & c_{s+\Delta-1}^\prime < \chi + s + \Delta < c_{s+\Delta}^\prime \notag \\ \implies & c_{s+\Delta-1}^\prime < c_s + \Delta < c_{s+\Delta}^\prime \notag \\ \implies & \begin{cases} c_{s-1} < c_s < c_{s+1} & \text{if } \Delta = 0 \\ c_{s+\Delta} < c_s + \Delta & \text{if } \Delta > 0 \\ c_s + \Delta < c_{s+\Delta} & \text{if } \Delta < 0 \end{cases} \notag \end{align}\]

using

\[c_i^\prime = \begin{cases} c_i & \text{if } i < s \\ c_{i+1} & \text{if } i \ge s \end{cases} \qquad \text{and} \qquad c_{i+j} \ge c_i + j \text{ for } j \ge 0 \text{ and } 0 \le i + j < n\]

For \(\Delta > 0\) the case contradicts \(c_{s+\Delta} \ge c_s + \Delta\), and for \(\Delta < 0\) it contradicts \(c_s \ge c_{s+\Delta} - \Delta\). Only the \(\Delta = 0\) case is true (the \(c_i\) are strictly increasing), and therefore \(k = s\) is the unique solution. Thus the magician correctly calculates \(c_s\).

Proof of optimality

The maximum number of different messages we can send the magician is limited by \(n!\). We have two choices:

- The card to select of which there are \(n\) options.

- The order of the remaining \(n - 1\) cards of which there are \((n - 1)!\) options (permutations).

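This gives a total of \(n (n - 1)! = n!\) options. In addition, the magician will be able to see the \(n - 1\) cards in the pile, so the selected card must be one of the remaining \(N - (n - 1)\) cards.

This results in an upper bound for the card deck of \(N = n! + n - 1\) cards. More precisely, two different hands can never produce the same ordered pile, since their hidden cards would differ but the magician would name the same card for both. There are \(\binom{N}{n}\) hands and \(\frac{N!}{(N - n + 1)!}\) ordered piles, and \(\binom{N}{n} \le \frac{N!}{(N - n + 1)!}\) simplifies to \(N - n + 1 \le n!\).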
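The general algorithm can be sketched in Python and checked exhaustively for small \(n\). Cards are the integers \(0\) to \(N - 1\). The text does not fix how \(p\) is mapped to a permutation, so this sketch assumes lexicographic ranking of the pile's cards; the helper names `nth_permutation` and `permutation_index` are hypothetical.

```python
import random
from itertools import combinations
from math import factorial

def nth_permutation(items, index):
    """The index-th permutation (0-based, lexicographic) of the sorted items."""
    pool = sorted(items)
    result = []
    for k in range(len(pool) - 1, -1, -1):
        pos, index = divmod(index, factorial(k))
        result.append(pool.pop(pos))
    return result

def permutation_index(perm):
    """Inverse of nth_permutation: the lexicographic rank of perm."""
    pool = sorted(perm)
    index = 0
    for x in perm:
        pos = pool.index(x)
        index += pos * factorial(len(pool) - 1)
        pool.pop(pos)
    return index

def assistant(hand, n):
    c = sorted(hand)                  # c_0 < c_1 < ... < c_{n-1}
    s = sum(c) % n
    selected = c[s]
    p = (selected - s) // n           # 0 <= p < (n-1)! by the bound above
    pile = nth_permutation(c[:s] + c[s + 1:], p)
    return selected, pile

def magician(pile, n):
    p = permutation_index(pile)
    chi = p * n + (-sum(pile)) % n    # (-s') mod n, since sum(pile) = s' (mod n)
    card = chi
    for seen in sorted(pile):         # skip over pile cards to reach the
        if seen <= card:              # chi-th (0-based) card not in the pile
            card += 1
    return card

for n in (3, 4):
    N = factorial(n) + n - 1          # 8 and 27 cards
    for hand in combinations(range(N), n):
        selected, pile = assistant(hand, n)
        assert magician(pile, n) == selected

random.seed(1)
N5 = factorial(5) + 4                 # 124 cards: too many hands to enumerate
for _ in range(2_000):
    hand = random.sample(range(N5), 5)
    selected, pile = assistant(hand, 5)
    assert magician(pile, 5) == selected
print("ok")
```

Exhaustive checking is cheap for \(n = 3\) and \(n = 4\); for \(n = 5\) there are over 200 million hands, so the sketch only samples them.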

Highest card

I challenge you to a game. I will deal four cards (from a regular 52-card deck), chosen randomly, face down. You get to look at #1 first and decide whether to keep it. If not, look at #2 and decide whether to keep that one. If not, look at #3 and decide. If you don’t take that, then #4 is your choice.

If the value of your chosen card is \(n\), I will pay you $\(n\). I tell you that each game costs $10 to play, and we can play as many games as you like.

Note: The value of Jack, Queen and King are 11, 12 and 13 respectively. The value of the rest of the cards are their face value, with the Ace worth 1.

Can you come up with a strategy that will let you come out on top?

Use the following strategy:

- Keep the first card if it is 10 or greater, otherwise go to the second.

- Keep the second card if it is 9 or greater, otherwise go to the third.

- Keep the third card if it is 8 or greater, otherwise choose the fourth.

On average, this strategy will come out ahead.

Proof

Let us initially assume for simplicity that the deck is infinite, with an equal proportion of every face value, so that each card is independent of the previous ones.

For the 4th card the expected value is:

\[E_4 = \frac{1}{13} \sum_{i=1}^{13} i = 7\]

For the 3rd card, if it is more than \(E_4\), we should keep it. Otherwise, look at the fourth. Thus the expected value is:

\[E_3 = \frac{1}{13} \sum_{i=8}^{13} i + \frac{1}{13} \sum_{i=1}^{7} E_4 \approx 8.615\]

Likewise for the 2nd card, if it is more than \(E_3\), we should keep it. Otherwise, look at the third, giving an expected value of:

\[E_2 = \frac{1}{13} \sum_{i=9}^{13} i + \frac{1}{13} \sum_{i=1}^{8} E_3 \approx 9.533\]

And finally, for the first card:

\[E_1 = \frac{1}{13} \sum_{i=10}^{13} i + \frac{1}{13} \sum_{i=1}^{9} E_2 \approx 10.138\]

Thus our strategy gives us an expected value of $10.138 per game. Note that we assumed that there was an infinite number of cards. In reality, once a card has been dealt, it won’t appear again. Since we only reject low cards, this reduces our chances of getting more low cards. Thus our proof gives a lower bound on the expected value.

This gives us an expected value of more than $10, letting you come out ahead.
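The recursion for \(E_4, \dots, E_1\) can be evaluated exactly with fractions, and the finite-deck expectation, which the argument above only bounds, can be computed directly by recursing over the cards left in a real 52-card deck. A minimal Python sketch, assuming the thresholds 10, 9 and 8 of the strategy above (the function names are illustrative):

```python
import math
from fractions import Fraction

VALUES = range(1, 14)        # ace = 1, ..., king = 13
THRESHOLDS = (10, 9, 8)      # keep card 1, 2, 3 if its value is at least this

def infinite_deck_values(num_cards=4):
    """[E_1, ..., E_n] from the recursion, as exact fractions."""
    e = Fraction(sum(VALUES), 13)   # E_n = 7: the last card must be kept
    values = [e]
    for _ in range(num_cards - 1):
        k = math.floor(e)           # reject values 1..k, keep k+1..13
        e = (k * e + sum(range(k + 1, 14))) / 13
        values.append(e)
    return values[::-1]

def finite_deck_value(thresholds=THRESHOLDS):
    """Exact expected payout when dealing from a real 52-card deck."""
    counts = [0] + [4] * 13         # counts[v] = cards of value v still in the deck

    def from_card(i):
        left = sum(counts)
        total = Fraction(0)
        for v in VALUES:
            if counts[v] == 0:
                continue
            prob = Fraction(counts[v], left)
            if i == len(thresholds) or v >= thresholds[i]:
                total += prob * v               # keep this card
            else:
                counts[v] -= 1                  # reject it and deal the next card
                total += prob * from_card(i + 1)
                counts[v] += 1
        return total

    return from_card(0)

e1 = infinite_deck_values()[0]
print(e1, float(e1))                # 22273/2197, about 10.138
print(float(finite_deck_value()))
```

The finite-deck figure is computed exactly rather than estimated, so it can be compared directly with the infinite-deck value.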

In general, our strategy generalises to \(n\) initial cards with:

\[\begin{align} E_n & = 7 \\ E_{i-1} & = \frac{1}{13} \left( \lfloor E_i \rfloor E_i + \sum_{j=\lfloor E_i \rfloor + 1}^{13} j \right) \end{align}\]

Pocket kings drawing dead

Tex is playing Texas Hold ’Em, and was on an amazing winning streak. The gods of poker were chatting and came up with a diabolical plan to frustrate Tex.

“How about drawing dead before the flop?” asked the first god.

“Better yet, let’s give him pocket kings drawing dead!” replied the second.

“Brilliant,” exclaimed the first god, “But there are only six opponents, how can we do it?”

“Like this…”

Give a set of seven 2-card hands, including the pocket kings for Tex, such that Tex is drawing dead before the flop (he has zero chance of winning any portion of the pot).

The following hands result in Tex drawing dead:

| Player | Hand |
|---|---|
| Tex | K\(\heartsuit\) K\(\diamondsuit\) |
| P1 | K\(\clubsuit\) A\(\diamondsuit\) |
| P2 | A\(\heartsuit\) K\(\spadesuit\) |
| P3 | A\(\clubsuit\) A\(\spadesuit\) |
| P4 | Q\(\heartsuit\) Q\(\diamondsuit\) |
| P5 | 7\(\heartsuit\) 7\(\diamondsuit\) |
| P6 | 7\(\clubsuit\) 7\(\spadesuit\) |

Proof

Tex can’t win with a pair because P3 already has a higher pair (a pair of aces). In addition, no pair of aces can come out on the board because all the aces are accounted for. Any pair on the board also gives P3 a higher two pair than Tex.

Tex can’t win with three of a kind because all the other kings are accounted for. Three of any other card on the board would give a full house, discussed later.

Tex can’t win with a straight. If 2 to 6 comes up on the board, P5 and P6 will have 3 to 7 straights, beating the board. If 8 to Q comes up on the board, P1 and P2 will have 10 to A straights. No other straights can come up on the board. The only straight Tex can make himself is 9 to K, which needs 9, 10, J and Q on the board, and then P1 and P2 again have 10 to A straights.

Tex can’t win with a flush. He can only make a flush in hearts or diamonds, and then P2 (A\(\heartsuit\)) or P1 (A\(\diamondsuit\)) has a higher, ace-high flush. A club or spade flush on the board is beaten by P3’s ace of that suit. Tex can’t make a straight flush either, because P4 holds the queens of hearts and diamonds.

Tex can’t win with a full house. If three of some card come up on the board, P3 will win with a higher full house. Tex can’t get another K as they are all accounted for.

Tex can’t win with four of a kind. If four of a kind comes up on the board, P1, P2 and P3 will split the pot because all have the highest kicker, an A. Tex can’t get four Ks as all the other Ks are accounted for.

Second black ace

You have in front of you a shuffled deck of cards, face down. You get to choose a card from any position. Which card should you pick to maximize your chance of selecting the second black ace from the top?

Select the last (52nd) card.

Proof

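Each position has an equal chance of holding a black ace (since the deck is well shuffled). However, for every position except the last, part of that probability comes from the card being the first black ace rather than the second. More precisely, the two black aces occupy one of \(\binom{52}{2}\) equally likely pairs of positions, so the probability that position \(k\) holds the second black ace is \(\frac{k - 1}{\binom{52}{2}}\). This is largest for the last card, where it equals \(\frac{1}{26}\).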
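The probability for every position can be tabulated by enumerating the equally likely pairs of positions the two black aces can occupy. A short Python sketch:

```python
from fractions import Fraction
from itertools import combinations

def second_black_ace_probs(deck_size=52):
    """probs[k-1] = P(the card at position k is the second black ace)."""
    pairs = list(combinations(range(1, deck_size + 1), 2))  # equally likely ace positions
    counts = [0] * (deck_size + 1)
    for _, second in pairs:
        counts[second] += 1
    return [Fraction(c, len(pairs)) for c in counts[1:]]

probs = second_black_ace_probs()
best = max(range(1, 53), key=lambda k: probs[k - 1])
print(best, probs[best - 1])  # 52 1/26
```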

Around the Earth

A rope with zero elasticity is placed tautly around the Earth’s equator.

Assume the Earth is a perfect sphere of radius 6378 km.

Rope ring

How much longer would the rope need to be so that it could form a ring 1 m above the ground everywhere?

\(2 \pi \approx 6.28\) meters of rope is required.

Proof

Let \(c\) be the original length of rope, \(r\) the radius of the earth.

\[c = 2 \pi r\]

Let \(\Delta c\) and \(\Delta r\) be the added rope and radius respectively.

\[\begin{align} c + \Delta c & = 2 \pi (r + \Delta r) \\ 2 \pi r + \Delta c & = 2 \pi r + 2 \pi \Delta r \\ \Delta c & = 2 \pi \Delta r \end{align}\]

Thus the change in the length of rope is \(2 \pi \Delta r\) regardless of the original radius! In our problem \(\Delta r = 1\) m.

Pulled rope

Suppose instead we only want to pull the rope taut so that it is 1 m high at one point. How much extra rope is required for this?

Adding 0.75 mm is sufficient to lift the rope up 1 m!
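Both answers can be checked numerically. This Python sketch evaluates \(\Delta c = 2 \pi \Delta r\) for the ring, and \(\Delta c = 2d - 2r\arctan(d/r)\) with \(d = \sqrt{2rh + h^2}\) for the pulled rope, using the formulas derived in the proofs and working in metres. Double precision is enough here, although the result is a small difference of two numbers of about 7 km.

```python
import math

def extra_rope_ring(dr):
    """Extra rope needed to raise the whole ring by dr."""
    return 2 * math.pi * dr

def extra_rope_lift(r, h):
    """Extra rope needed to lift a taut rope to height h at a single point."""
    d = math.sqrt(2 * r * h + h * h)   # straight section from the lifted point
    alpha = math.atan(d / r)           # angle of arc no longer touching the Earth, per side
    return 2 * d - 2 * r * alpha

r = 6_378_000.0                  # Earth's radius in metres, as given in the problem
print(extra_rope_ring(1.0))      # about 6.28 m
print(extra_rope_lift(r, 1.0))   # about 0.00075 m
```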

Proof

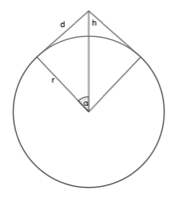

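Let \(d\) be the length of each straight section of rope, from the lifted point to where the rope meets the Earth tangentially. The length of the rope is then \(2 (\pi - \alpha) r + 2d\), thus the extra rope required is: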

\(\Delta c = 2 (\pi - \alpha) r + 2d - 2 \pi r = 2 d - 2 \alpha r\) where \(\alpha = \arctan\left(\frac{d}{r}\right)\)

We can calculate \(d\) using Pythagoras’ theorem:

\[d^2 + r^2 = (r+h)^2\] \[d = \sqrt{2rh + h^2}\]

Combining:

\[\Delta c = 2 \sqrt{2rh + h^2} - 2 r \arctan\left(\frac{\sqrt{2rh + h^2}}{r}\right)\]

Using \(r = 6378 \text{ km}\) and \(h = 0.001 \text{ km}\):

\[\Delta c \approx 7.5 \times 10^{-7} \text{ km} = 0.75 \text{ mm}\]

Belt loop

A thin belt is stretched around three pulleys, each of which is 1 m in diameter. The distances between the centers of the pulleys are 3 m, 4 m, and 6 m. How long is the belt?

The belt can be divided into straight and curved sections. The lengths of the straight sections are the same as the distances between the centers of the pulleys. The curved sections turn through the exterior angles of the triangle formed by the centers, which add up to 360°, so together they make one complete circumference of a 1 m pulley, \(\pi\) m. So the total length is \(3 + 4 + 6 + \pi \approx 16.1\) m.

Buried powerline

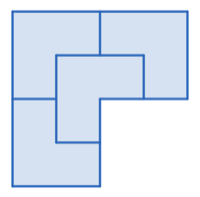

There is a square plot of land with 1 km long sides. A perfectly straight powerline runs underneath through the plot, but we don’t know where it enters or exits.

You want to find any part of the powerline. You could dig around the entire perimeter (4 km), but that would be wasteful as you could get the same result by only digging up 3 sides of the square (3 km).

Can you do better?

We can trivially do better by cutting an X through the plot, cutting lines from opposite corners. This gives a length of \(2 \sqrt{2} \approx 2.83\) km.

We can do better by cutting two adjacent sides, then cutting a line from the opposite corner to the center. This gives a length of \(2 + \frac{\sqrt{2}}{2} \approx 2.71\) km.

We can improve this slightly by bringing in the two lines along the sides until they meet at 120 degrees.

Let \(a = \frac{\sqrt{2}}{2}\) be half the length of the diagonal. This gives a total length of \(2 \frac{2 a}{\sqrt{3}} + 2 a - \frac{a}{\sqrt{3}} \approx 2.64\) km.

(This may not be optimal.)
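The quoted lengths are easy to recompute. This Python sketch evaluates each layout; note that the 120-degree expression simplifies to \(\sqrt{2} + \frac{\sqrt{6}}{2} \approx 2.639\) km.

```python
import math

a = math.sqrt(2) / 2  # half the diagonal of the 1 km square

layouts = {
    "whole perimeter": 4.0,
    "three sides": 3.0,
    "both diagonals": 2 * math.sqrt(2),
    "two sides + half diagonal": 2 + a,
    "120-degree layout": 2 * (2 * a / math.sqrt(3)) + 2 * a - a / math.sqrt(3),
}

for name, km in layouts.items():
    print(f"{name:26s} {km:.3f} km")
```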

Cold walks

Which places on the earth can you walk one kilometer south, then one kilometer west, then one kilometer north and end up in the same spot?

Assume the earth is a perfect sphere and oceans or mountains don’t get in your way.

North pole

The simplest place with this property is the north pole. After you walk south, any amount of distance walking west will leave the north pole one kilometer north of you.

Other solutions

However, there are also an infinite number of places near the south pole with this property. Consider the circle centered on the south pole, with a circumference of one kilometer. Walking west for one kilometer from anywhere on this circle will return you to the same position. Thus if we start one kilometer north of this circle, then we also satisfy the problem.

Walking once around the south pole is not the only way to stay in the same place. We could choose a smaller circle such that walking west one kilometer would circle the south pole \(n\) times, for any positive integer \(n\).

The circumference of a circle on a sphere is given by \(c = 2 \pi R \sin\left(\frac{r}{R}\right)\) where \(R\) is the radius of the sphere (the Earth) and \(r\) is the distance from the pole to the circle, measured along the surface. Since the circumference must be \(\frac{1}{n}\) km we have:

\[r = R \arcsin\left(\frac{1}{2 \pi R n}\right)\]

Since we need to start one kilometer north of any of these circles, we can start at any point:

\(\left(R \arcsin\left(\frac{1}{2 \pi R n}\right) + 1\right)\) kilometers north of the south pole for any positive integer \(n\).
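The formula can be evaluated for the first few values of \(n\). This Python sketch borrows \(R = 6378\) km from the rope puzzle as an assumption (this section only says the Earth is a perfect sphere) and checks that walking 1 km west on each circle goes around the pole exactly \(n\) times.

```python
import math

R = 6378.0  # Earth's radius in km (assumed; the value used in the rope puzzle)

def circle_distance(n, radius=R):
    """Surface distance r from the south pole to the circle of circumference 1/n km."""
    return radius * math.asin(1 / (2 * math.pi * radius * n))

def circumference(r, radius=R):
    """Circumference of the circle at surface distance r from a pole."""
    return 2 * math.pi * radius * math.sin(r / radius)

for n in range(1, 4):
    r = circle_distance(n)
    loops = 1 / circumference(r)  # times around the pole when walking 1 km west
    print(f"n={n}: start {r + 1:.6f} km from the south pole; the walk circles it {loops:.3f} times")
```

For a planet this large the circles are tiny, so \(r\) is very close to the flat-plane value \(\frac{1}{2 \pi n}\) km.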

Colored plane

Show that if each point in the plane is colored with one of 3 colors, there must exist a unit segment whose endpoints are the same color.

Set up two equilateral triangles ABC and BCD with unit sides which share a side. Let the color of A be green.

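If B or C is green then we are done, and likewise if B and C are the same color. Otherwise B and C have the other two colors, and since D is a unit distance from both of them, we are also done unless D is green.

This leaves the case where D is green. The distance from A to D is \(\sqrt{3}\). The pair of triangles can be rotated about A to any angle, so either we find a monochromatic unit segment or every point at distance \(\sqrt{3}\) from A is green. This describes a green circle.

The circle has a diameter of \(2\sqrt{3} > 1\), and thus there are infinitely many pairs of points on the circle exactly a unit distance apart, both of them green.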

Finding the river

You are dropped on the surface of a very large planet, which has a single river which runs perfectly straight around the equator. You begin to explore the planet, in your dune buggy, by driving in a straight line away from the river, but a tornado hits you spinning you around and knocking you unconscious.

When you awake, you’ve forgotten which direction you had been travelling in. You do know however that you travelled 1000 km. You have an accurate compass and odometer on board. The river is in a canyon, and you can’t see it until you reach it.

You have enough fuel to go 6400 km. Can you make it back to the river (any point on the river)?

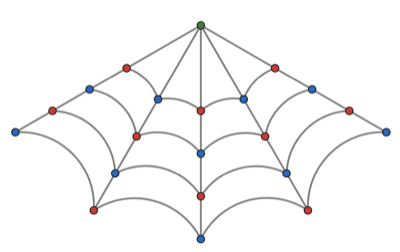

From point \(O\) travel:

- 1155 km to point \(A\)

- 577 km to point \(B\)

- 3665 km around the circle (of radius 1000 km) to point \(C\)

- 1000 km to point \(D\)

This path is guaranteed to cross the river.
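The leg lengths and the minimum can be checked numerically. The total distance derived in the proof separates into a part depending only on \(\theta_1\) and a part depending only on \(\theta_2\), so this Python sketch minimises each part with a crude grid search instead of solving the derivative equations; the grid resolution is an arbitrary choice.

```python
import math

R = 1000.0  # distance to the river, in km

def total_distance(t1, t2, r=R):
    """Length of O->A, A->B, the arc B->C and C->D for angles t1, t2 (radians)."""
    return r * (1 / math.cos(t1) + math.tan(t1) + 2 * (math.pi - t1 - t2) + math.tan(t2))

def grid_argmin(f, lo=1e-6, hi=math.pi / 2 - 1e-3, steps=200_000):
    """Crude 1-D grid search; adequate here because each part is smooth."""
    return min((lo + (hi - lo) * k / steps for k in range(steps + 1)), key=f)

# Minimise the theta_1 part and the theta_2 part separately.
t1 = grid_argmin(lambda t: 1 / math.cos(t) + math.tan(t) - 2 * t)
t2 = grid_argmin(lambda t: math.tan(t) - 2 * t)

legs = [R / math.cos(t1), R * math.tan(t1), 2 * (math.pi - t1 - t2) * R, R * math.tan(t2)]
print([round(x) for x in legs])  # roughly [1155, 577, 3665, 1000]
print(math.degrees(t1), math.degrees(t2), total_distance(t1, t2))
```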

Proof

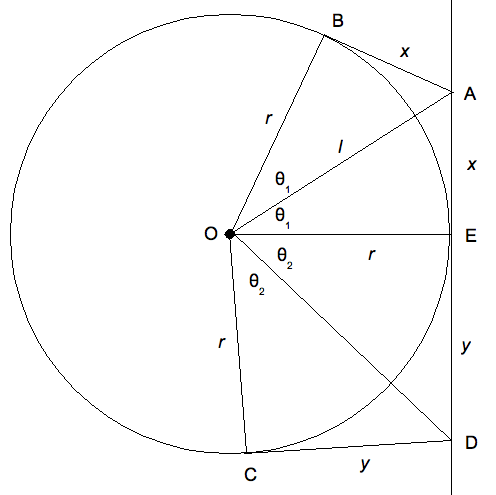

Let the distance to the river be \(r\). The problem is equivalent to finding a curve that intersects with all of the tangents of a circle of radius \(r\).

To minimise the distance travelled we want to:

- Go directly to some tangent line (all are equivalent by symmetry)

- Follow it until we reach the circle

- Follow the circle around for some distance

- Then go to the closest point on our original tangent.

We will pick some arbitrary tangent, as all are equivalent. We choose the vertical tangent on the right side.

We need to head to some point \(A\) on the tangent, at an angle \(\theta_1\) from the horizontal. The length of the line from \(O\) to \(A\) is given by:

\[l = \frac{r}{\cos \theta_1}\]

Next we travel from \(A\) to \(B\), and this distance is given by:

\[x = r \tan \theta_1\]

Next we travel around the circle from \(B\) to \(C\). This covers an angle of \(2 \pi - 2 \theta_1 - 2 \theta_2\), which adds a distance of:

\[a = 2 (\pi - \theta_1 - \theta_2) r\]

Finally, to head from point \(C\) to point \(D\):

\[y = r \tan \theta_2\]

This gives a total distance of:

\[\begin{align} d & = l + x + a + y \notag \\ & = r \left( \frac{1}{\cos \theta_1} + 2 (\pi - \theta_1 - \theta_2) + \tan \theta_1 + \tan \theta_2 \right) \end{align}\]

We wish to minimise \(d\) thus we require that both of the following hold:

\[\begin{align} \frac{\partial d}{\partial \theta_1} & = 0 \\ \frac{\partial d}{\partial \theta_2} & = 0 \end{align}\]

Solving for \(\theta_1\):

\[\begin{align} \frac{\partial d}{\partial \theta_1} & = r \left( \frac{\sin \theta_1}{\cos^2 \theta_1} - 2 + (1 + \tan^2 \theta_1) \right) \\ 0 & = \frac{\sin \theta_1}{\cos^2 \theta_1} + \tan^2 \theta_1 -1 \\ & = -\frac{2 \sin \theta_1-1}{\sin \theta_1-1} \\ & = 2 \sin \theta_1-1 \text{ where } \sin \theta_1 \ne 1 \\ \sin \theta_1 & = \frac{1}{2} \\ \theta_1 & = \frac{\pi}{6} \end{align}\]

Solving for \(\theta_2\):

\[\begin{align} \frac{\partial d}{\partial \theta_2} & = r \left(- 2 + (1 + \tan^2 \theta_2) \right) \\ 0 & = \tan^2 \theta_2 - 1 \\ \tan \theta_2 & = 1 \\ \theta_2 & = \frac{\pi}{4} \end{align}\]

Substituting \(\theta_1\) and \(\theta_2\) into the equation for \(d\):

\[\begin{align} d & = r \left( \frac{2}{\sqrt{3}} + \frac{7 \pi}{6} + 1 + \frac{1}{\sqrt{3}} \right) \\ & = r \left( \sqrt{3} + 1 + \frac{7 \pi}{6} \right) \end{align}\]

For our problem, \(r = 1000\) km, which gives a total distance of:

\[d \approx 6397 \text{ km}\]

Ghost ships

Four ghost ships, \(A\), \(B\), \(C\) and \(D\), sail on a night so foggy that visibility is nearly zero. All ships sail in a straight line, changing neither speed nor heading. They have been sailing this way for a long time, and all have different headings.

Sometime in the night \(A\) collides with \(B\), but they pass through each other since they are ghost ships. As they pass, \(A\)’s captain hears \(B\)’s captain exclaim that it was their third collision that night.

Later in the night \(A\) runs into \(C\) and hears the same exclamation from \(C\)’s captain.

Will \(A\) hit \(D\)?

\(A\) will definitely hit \(D\).

Proof

Plot the path of the ships in 3 dimensions, with time as the third dimension.

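Since there are only four ships, \(B\)’s three collisions must have been with \(A\), \(C\) and \(D\); likewise \(C\) has collided with \(A\), \(B\) and \(D\). The paths of \(B\) and \(C\) intersect (they collided) and are not parallel (they have different headings), so together they span a plane.

The path of \(A\) lies in the same plane, since it meets the paths of both \(B\) and \(C\) at two different points. Likewise for \(D\). Hence the paths of \(A\) and \(D\) are coplanar.

\(A\) and \(D\) are on different headings, so their paths are not parallel and must intersect. They’ve both been sailing for a long time, hence their paths don’t intersect in the past, so they must intersect in the future.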

Lake monster

You are in a row boat in the middle of a circular lake. On the shore of the lake is a monster who will eat you if it catches you. Luckily, the monster can’t swim and, on land, you can run faster than the monster. You are safe while you are in the boat, and if you reach the shore ahead of the monster then you can escape. Both you and the monster can turn instantaneously.

The monster can run 4 times as fast as you can row. How can you escape?

Let \(r\) be the radius of the lake.

First note that the simple strategy of rowing directly away from the monster fails. You need to travel a distance of \(r\), while the monster needs to travel \(\pi r\) but does so 4 times faster: in the time you row \(r\), it can run \(4 r > \pi r\).

However, you can move further away from the center while keeping the monster on the opposite side. If you are closer than \(\frac{r}{4}\) to the center, then your angular speed when rowing in a circle around the center is greater than the monster’s angular speed around the shore.

So first row out to just under \(\frac{r}{4}\) from the center while keeping the monster opposite you. From there, row directly to the closest shore. The monster will still have to travel a distance of \(\pi r\), but you only have to travel about \(\frac{3}{4} r\).

Even though you are 4 times slower, you will reach the shore first: in the time you row \(\frac{3}{4} r\), the monster can only run \(4 \times \frac{3}{4} r = 3 r < \pi r\).

Extension

This strategy works as long as the monster is less than \(\pi + 1 \approx 4.14\) times faster.

However, this strategy is not optimal and you can escape a monster who is up to ~4.6 times faster. For a proof see this blog post.
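The break-even ratio for the simple strategy can be checked directly. This Python sketch compares the two travel times for the circle-then-dash strategy against a monster \(k\) times faster, taking \(r = 1\) and a rowing speed of 1 (arbitrary units); it only covers this simple strategy, not the better one mentioned above.

```python
import math

def simple_strategy_escapes(k):
    """Does the circle-then-dash strategy beat a monster k times faster?

    Circle just inside radius r/k with the monster opposite, then row straight
    out: about r*(1 - 1/k) for you versus pi*r for the monster. With r = 1 and
    rowing speed 1, compare the two travel times.
    """
    your_time = 1 - 1 / k
    monster_time = math.pi / k
    return your_time < monster_time

print(simple_strategy_escapes(4))    # True: 0.75 < pi/4 (about 0.785)
print(simple_strategy_escapes(4.2))  # False
print(math.pi + 1)                   # break-even speed ratio, about 4.14
```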

Planet shadow

A number of stationary perfectly-spherical planets of equal radius are floating in space. The surface of each planet includes a region that is invisible from the other planets. Show that the total area of these regions is equal to the surface area of one planet.

Fix any direction and call it “north.” Look at the north poles of all planets. A north pole is private if and only if there are no planets further to the north. Therefore, only the northernmost planet has a private north pole.

Since north was arbitrary, this is true for any direction. If we take the surface of a single planet, every point on that planet corresponds to a direction. Thus each point on this surface maps to exactly one hidden point: the hidden point for that direction. Similarly, every hidden point maps to a unique direction and thus a unique point on the surface of the planet. Since all the planets have the same radius, this correspondence preserves area.

Points and coins

I draw 10 points on a piece of paper. I give you 10 coins of the same size. Show that you can always cover all the points using the coins, without any coins overlapping.

First note that it is not true in general if we have \(n\) points and \(n\) coins. Any layout of coins will always have gaps, and will fail to cover a sufficiently dense set of points.

Instead consider an infinite hexagonal packing of circles the same size as the coins. The density of such a packing is \(\frac{\pi}{2\sqrt{3}} \approx 0.9069\), so a randomly translated copy of the packing covers any fixed point with that probability. Take a randomly translated close packing. Then:

\[\begin{align} P(\text{At least one point is not covered}) \le & \sum_{i=1}^{10} P(\text{The } i\text{th point is not covered}) \\ = & 10 \left(1 - \frac{\pi}{2\sqrt{3}}\right) \\ \approx & 0.931 \\ < & 1 \end{align}\]

This union bound holds regardless of how the events are correlated; correlation can only make the true probability lower than the sum.

Since this probability is less than 1, a random translation of the hexagonal packing covers all the points with positive probability. If no translation covered all the points, that probability would be 0, thus a covering translation must exist.

Finally, note that at most 10 of the coins in the infinite packing cover any of the points, because each point lies under at most one coin. Thus we only need to use those (at most) 10 coins.
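The probabilistic argument also works as a search: each random translation succeeds with probability at least \(1 - 0.931 = 0.069\), so a few dozen tries usually suffice. This Python sketch assumes unit-radius coins with centres on the hexagonal lattice generated by \((2, 0)\) and \((1, \sqrt{3})\), and counts a point on a coin's edge as covered.

```python
import math
import random

SQRT3 = math.sqrt(3)
U, V = (2.0, 0.0), (1.0, SQRT3)  # lattice of coin centres for unit-radius coins

def covering_coin(point, shift):
    """Lattice indices (i, j) of the coin covering point, or None if uncovered."""
    x, y = point[0] - shift[0], point[1] - shift[1]
    b = y / SQRT3              # (x, y) = a*U + b*V
    a = (x - b) / 2
    i0, j0 = math.floor(a), math.floor(b)
    for i in range(i0 - 1, i0 + 3):       # any covering centre lies among these
        for j in range(j0 - 1, j0 + 3):
            cx, cy = i * U[0] + j * V[0], i * U[1] + j * V[1]
            if (x - cx) ** 2 + (y - cy) ** 2 <= 1.0:
                return (i, j)
    return None

def find_covering(points, tries=10_000, seed=0):
    """Try random translations of the packing until every point is covered."""
    rng = random.Random(seed)
    for _ in range(tries):
        s, t = rng.random(), rng.random()  # uniform over one lattice cell
        shift = (s * U[0] + t * V[0], s * U[1] + t * V[1])
        coins = [covering_coin(p, shift) for p in points]
        if None not in coins:
            return shift, set(coins)
    return None

rng = random.Random(1)
points = [(rng.uniform(0, 6), rng.uniform(0, 6)) for _ in range(10)]
shift, coins = find_covering(points)
print(len(coins), "coins used")  # at most 10
```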

Worm and the apple

There is an apple with radius 31 mm. It is in the shape of a perfect sphere. A worm gets into the apple and eats a tunnel of total length 61 mm, and then leaves the apple. (The tunnel need not be a straight line.)

Prove that you can cut the apple with a straight slice through the center so that one of the two halves is not eaten.

First consider the problem for a circle instead of a ball.

Let \(A\) and \(C\) be the entry and exit points of the worm. Let \(B\) be the point diametrically opposite the worm’s entry point.

Note that the worm can never travel from \(A\) to \(B\), as the distance between them is 62 mm (the diameter) and the tunnel is only 61 mm long. In particular, \(C \ne B\).

Draw the perpendicular bisector of \(C\) and \(B\). Since \(C\) and \(B\) both lie on the circle, this line goes through the circle’s center (hence cuts the circle in two). By construction, every point \(P\) on the line is equally far from \(C\) and \(B\). If the tunnel touched the line at \(P\), then \(|AP| + |PB| = |AP| + |PC| \le 61\) mm, so the worm could also have reached \(B\), contradicting \(|AB| = 62\) mm. Thus the tunnel cannot touch the line.

Cutting the apple along the line leaves the tunnel completely on one side, leaving the other side untouched by the worm.

For a sphere, replace the bisecting line with the bisecting plane of all points equidistant from \(B\) and \(C\). The rest of the proof carries through.

10 wires

10 wires run underground between two buildings. Unfortunately, they are unlabelled so you don’t know which ends match up. You need to label the wires for the wires to be useful.

You only have a battery and lightbulb as equipment.

How many trips do you need to make between the two buildings to label all the wires?

You only need to make one round trip.

Proof

Starting in the first building:

- Label 1 wire with \(A\).

- Connect the next 2 wires and label them with \(B\).

- Connect the next 3 wires and label them with \(C\).

- Connect the remaining 4 wires and label them with \(D\).

This accounts for all the wires as \(1+2+3+4 = 10\).

Now go to the second building. Using the battery and lightbulb, test every pair of wires (45 tests) to check if they are connected.

Label the wire that is not connected to any other wire as \(A\), the wires that are only connected to each other as \(B\), the group of 3 connected wires as \(C\), and the group of 4 connected wires as \(D\).

Update the labels as follows:

- Take one wire from each group and connect them together. Append \(A\) to their labels - this will result in labels \(AA\), \(BA\), \(CA\) and \(DA\).

- Take another wire from each group (which has not yet been updated) and connect them together. Append \(B\) to the label. Only 3 of the groups will have a spare wire for this.

- Continue in this way connecting wires, appending \(C\) and \(D\) in turn. Each will have one less group with spare wires to update.

As before this accounts for all the wires as \(1+2+3+4 = 10\). Each wire now has a unique 2 letter label, because each wire in a given group got a different letter appended.

Now return to the first building. Disconnect the original connections. Using the battery and lightbulb, test every pair of wires (45 tests) to check if they are connected.

Append \(D\) to the wire that is not connected to any other wire, \(C\) to the wires that are only connected to each other, \(B\) to the group of 3 connected wires, and \(A\) to the group of 4 connected wires.

The labels of the wires in the two buildings should now match, with each wire having a unique 2 letter label.

This strategy works for any triangular number of wires.
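The procedure can be simulated with a random hidden wiring to check that the labels at both ends agree. In this Python sketch, `perm` (a hypothetical stand-in for the unknown wiring) is used only to model what the lightbulb shows; the labelling itself only uses the test results.

```python
import random

LETTERS = "ABCD"

def find_groups(ends, lit):
    """Group wire ends using bulb tests.

    Joined wires form an equivalence class, so testing each end against one
    member of every known group gives the same groups as testing all pairs.
    """
    groups = []
    for e in ends:
        for g in groups:
            if lit(e, g[0]):
                g.append(e)
                break
        else:
            groups.append([e])
    return groups

def label_wires(perm):
    """Simulate the procedure; perm[w] is the unknown far end of near wire w."""
    n = len(perm)
    near = {}
    w = 0
    # Building 1: join groups of sizes 1, 2, 3, 4 and label them A, B, C, D.
    for size, letter in zip((1, 2, 3, 4), LETTERS):
        for _ in range(size):
            near[w] = letter
            w += 1
    back = {perm[w]: w for w in range(n)}

    # Building 2: the bulb lights iff two far ends are joined at building 1.
    far = {}
    for g in find_groups(range(n), lambda x, y: near[back[x]] == near[back[y]]):
        for pos, e in enumerate(g):
            # First letter from the group size; the pos-th wires of every group
            # are joined together and get second letter LETTERS[pos].
            far[e] = LETTERS[len(g) - 1] + LETTERS[pos]

    # Back at building 1 (original joins removed): group by the new joins.
    for g in find_groups(range(n), lambda x, y: far[perm[x]][1] == far[perm[y]][1]):
        for w in g:
            near[w] += LETTERS[4 - len(g)]  # group of 4 -> A, 3 -> B, 2 -> C, 1 -> D
    return near, far

perm = list(range(10))
random.Random(0).shuffle(perm)
near, far = label_wires(perm)
print(all(near[w] == far[perm[w]] for w in range(10)))  # True
```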

3 prisoners in a line

3 prisoners are brought to a room with 3 red hats and 2 blue hats. They are told to line up single file facing forward, so that each prisoner can only see those in front of them. They are blindfolded and a hat is placed on each prisoner’s head. The remaining hats are disposed of.

The blindfolds are removed and the prisoners are told that the first person to correctly call out the colour of their hat will go free. They have one minute.

For a while the prisoners stand silent, but just before the minute is over the prisoner at the front correctly names the colour of their hat.

What was the colour of the hat on the prisoner at the front?

The prisoner at the front had a red hat.

Proof

The prisoner at the back can see the colours of the other two prisoners’ hats. If the hats were both blue then the prisoner could deduce that their own colour was red, and they would instantly say this. Since the prisoner at the back remained quiet, we (and the two prisoners in front) know that at least one of the front two prisoners has a red hat.

The middle prisoner can only see the colour of the front prisoner’s hat. If it were blue, then the middle prisoner could deduce that their own hat was red, since at least one of the front two hats is red. However, they do not speak. This means that the colour of the hat on the front prisoner must be red.
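The deduction can be replayed by enumerating the possible hat assignments and discarding those in which a prisoner would have spoken. A small Python sketch:

```python
from itertools import product

# Possible hats for (back, middle, front): 3 red and 2 blue exist, so at most 2 blue.
worlds = [w for w in product("RB", repeat=3) if w.count("B") <= 2]

# The back prisoner sees middle and front; seeing two blues, they would know
# their own hat is red and speak at once. They stayed silent.
worlds = [w for w in worlds if not (w[1] == "B" and w[2] == "B")]

def middle_would_know(front, candidates):
    """Would the middle prisoner, seeing this front hat, know their own?"""
    return len({w[1] for w in candidates if w[2] == front}) == 1

# The middle prisoner also stayed silent.
worlds = [w for w in worlds if not middle_would_know(w[2], worlds)]

print(sorted({w[2] for w in worlds}))  # ['R']
```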

3 switches and a lightbulb

You are in a room with 3 switches. One of the switches controls a lamp with an incandescent lightbulb in a nearby room. You may flip the switches as many times as you like. You may only enter the room with the lamp once. You cannot see if the lamp is on from the room with the switches.

How can you determine which switch controls the lamp?

Number the switches #1, #2 and #3. Use the following strategy:

- Turn on switch #1.

- Wait a suitably long time (we will arbitrarily choose one hour).

- Turn off switch #1.

- Turn on switch #2.

- Enter the room with the lamp.

- Examine the lamp:

  - If the lamp is on, switch #2 controls the lamp.

  - If the lamp is off, but the lightbulb is warm to the touch, switch #1 controls the lamp.

  - Otherwise switch #3 controls the lamp.

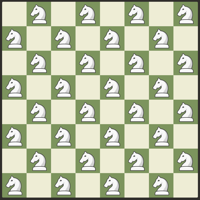

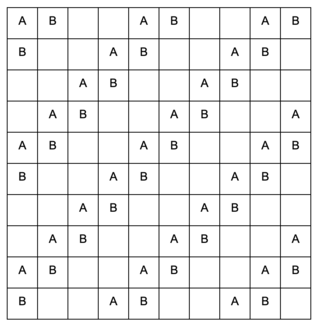

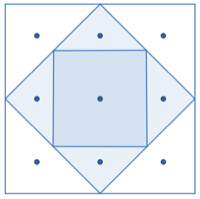

Attacking knights

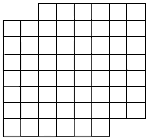

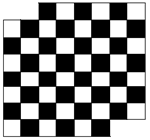

What is the maximum number of knights which can be placed on a chessboard with none of the knights attacking each other?

32 knights:

All the knights are on black squares, and knights on black squares can only attack white squares.

Proof

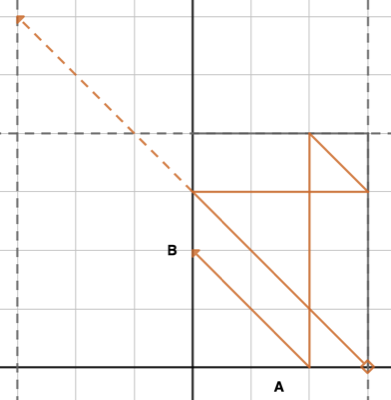

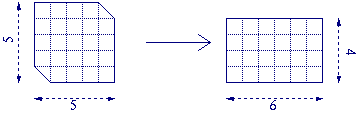

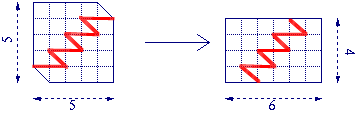

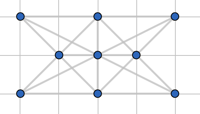

Pair up squares as in the following diagram. Paired squares are identified by the same piece.

At most one knight can be placed on each pair of squares, since the two squares of a pair attack each other. Since this arrangement can be tiled across the entire chessboard, the knights can take up at most half the chessboard: 32 squares.
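Both halves of the argument can be checked with a short sketch: the 32 squares of one colour are mutually non-attacking, and the board splits into 32 pairs of squares a knight’s move apart. The 2x4 block pairing used here is an assumption; it may not match the diagram exactly, but any such pairing gives the same bound.

```python
KNIGHT_MOVES = [(1, 2), (2, 1), (-1, 2), (-2, 1), (1, -2), (2, -1), (-1, -2), (-2, -1)]


def knight_targets(r, c, n=8):
    """Squares a knight on (r, c) attacks on an n x n board."""
    for dr, dc in KNIGHT_MOVES:
        rr, cc = r + dr, c + dc
        if 0 <= rr < n and 0 <= cc < n:
            yield rr, cc


# Lower bound: one knight on every square of one colour.
knights = {(r, c) for r in range(8) for c in range(8) if (r + c) % 2 == 0}
no_attacks = all(t not in knights for k in knights for t in knight_targets(*k))


def knight_pairs():
    """Split the board into 2x4 blocks and pair squares a knight's move apart."""
    pairs = []
    for br in range(0, 8, 2):
        for bc in range(0, 8, 4):
            for dc in range(4):
                top = (br, bc + dc)
                bottom = (br + 1, bc + (dc + 2) % 4)
                pairs.append((top, bottom))
    return pairs


pairs = knight_pairs()
print(len(knights), no_attacks, len(pairs))  # 32 True 32
```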

Calendar cubes

Number the faces of two cubes so that they can always be placed side-by-side to show the current day of the month.

We need to be able to represent all numbers from 01 to 31.

0, 1 and 2 each need to be paired with almost every other digit (01 to 09, 10 to 19 and 20 to 29), and 11 and 22 need two copies of the same digit, so 0, 1 and 2 must appear on both cubes. The remaining digits 3 to 9 only ever need to be paired with 0, 1 or 2, never with each other, so each can be placed on either cube.

This leaves us 6 remaining faces, but we have 7 remaining digits. We can take advantage of the fact that the same face can represent both 6 and 9 by placing the face upside down.

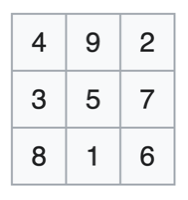

Thus the following layout works:

- Cube 1: 0, 1, 2, 3, 4, 5

- Cube 2: 0, 1, 2, 6/9, 7, 8
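A quick exhaustive check of this layout, as a sketch. It assumes the 6/9 face can be read either way up and that either cube may be placed on the left.

```python
CUBE_1 = {0, 1, 2, 3, 4, 5}
CUBE_2 = {0, 1, 2, 6, 7, 8}  # the 6 face doubles as 9 when turned upside down


def faces(cube):
    """Digits a cube can show, counting the upside-down 6/9 face."""
    shown = set(cube)
    if 6 in shown or 9 in shown:
        shown |= {6, 9}
    return shown


def can_show(day):
    """True if the two cubes can display `day` as two digits, in either order."""
    tens, units = divmod(day, 10)
    a, b = faces(CUBE_1), faces(CUBE_2)
    return (tens in a and units in b) or (tens in b and units in a)


missing = [day for day in range(1, 32) if not can_show(day)]
print(missing)  # []
```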

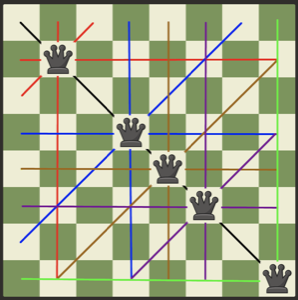

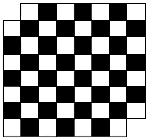

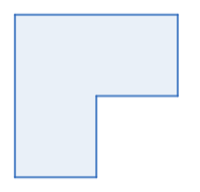

Covering queens

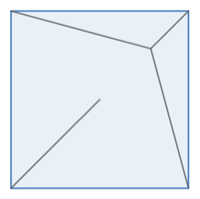

Place 5 queens on a chessboard so that they attack every square.

There are several possible solutions. Here is one:

Note: A 4-queen solution is not possible, but the proof requires a brute-force search.
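That brute-force search is small enough to sketch. The code below first verifies one 5-queen covering, with queens on b2, d4, e5, f6 and h8 (chosen here for illustration; not necessarily the arrangement in the diagram), then runs an exhaustive search showing that no 4 queens suffice. It assumes a queen covers its own square and treats lines of attack as unblocked, which can only make covering easier, so the negative result still stands. The search uses a standard branching idea: some queen must cover the first uncovered square, so only placements covering it need to be tried.

```python
N = 8
FULL = (1 << (N * N)) - 1  # bitmask with one bit per square


def cover_mask(r, c):
    """Bitmask of squares a queen on (r, c) attacks, including its own square."""
    mask = 0
    for rr in range(N):
        for cc in range(N):
            if rr == r or cc == c or rr - cc == r - c or rr + cc == r + c:
                mask |= 1 << (rr * N + cc)
    return mask


MASKS = [cover_mask(sq // N, sq % N) for sq in range(N * N)]
# For each square, the queen placements that would cover it.
COVERERS = [[q for q in range(N * N) if MASKS[q] >> sq & 1] for sq in range(N * N)]


def covering(queens):
    """Union of the squares covered by queens given as (row, col) pairs."""
    mask = 0
    for r, c in queens:
        mask |= MASKS[r * N + c]
    return mask


def search(k, covered=0):
    """Exhaustively decide whether k more queens can cover every remaining square."""
    if covered == FULL:
        return True
    if k == 0:
        return False
    uncovered = FULL & ~covered
    target = (uncovered & -uncovered).bit_length() - 1  # lowest uncovered square
    return any(search(k - 1, covered | MASKS[q]) for q in COVERERS[target])


# b2, d4, e5, f6, h8 as (row, col), counting rows and columns from 0.
FIVE = [(1, 1), (3, 3), (4, 4), (5, 5), (7, 7)]
print(covering(FIVE) == FULL)  # True
print(search(4))               # False
```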

Dice groups

On a table in front of you there are two groups of 5 dice each. One of the groups adds to 12 and the other to 16. You are blindfolded and asked to create two piles which add up to the same number.

How can you do it?

Move a die from one pile to the other, so you have a pile of 6 dice and a pile of 4 dice. Flip all the dice in the pile of 4. The two piles will have the same sum.

Proof

First note that opposite sides of a die add up to 7. Thus flipping a die changes its value from \(x\) to \(7-x\). Flipping a group of \(n\) dice changes the total from \(x\) to \(7n-x\).

The sum of all the dice in front of you is 28. Thus if the total of one group is \(x\) then the total of the other group is \(28-x\). If the second group had only 4 dice, then we could make the groups equal by flipping those 4 dice. The flipped group would have a total of \(7\times4-(28-x) = x\).

If we shift a die from one group to the other the overall total remains 28, but now we have a group of 4 which we can flip.
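A quick simulation of the trick, as a sketch: random groups with totals 12 and 16 are generated by rejection sampling (an assumption of this example, not part of the puzzle), one die is moved, and the group of 4 is flipped.

```python
import random


def random_group(rng, size, target):
    """Roll `size` dice until they total `target` (rejection sampling)."""
    while True:
        dice = [rng.randint(1, 6) for _ in range(size)]
        if sum(dice) == target:
            return dice


def blindfold_trick(group_a, group_b):
    """Move one die from group_a to group_b, then flip the 4 dice left in group_a."""
    moved, rest = group_a[0], group_a[1:]
    flipped = [7 - x for x in rest]  # opposite faces of a die add up to 7
    return sum(flipped), sum(group_b + [moved])


rng = random.Random(2024)
for _ in range(1000):
    twelve = random_group(rng, 5, 12)
    sixteen = random_group(rng, 5, 16)
    # It does not matter which group the die is taken from.
    for a, b in ((twelve, sixteen), (sixteen, twelve)):
        small, large = blindfold_trick(a, b)
        assert small == large

print(blindfold_trick([1, 2, 3, 3, 3], [4, 4, 4, 2, 2]))  # (17, 17)
```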

Flipping coins

On a table in front of you there are 100 coins, 20 heads up and 80 tails up. You are blindfolded and asked to create two groups of coins each with an equal number of heads up.

How can you do it?

Arbitrarily split the coins up into a group of 20 and a group of 80. Now flip all the coins in the group of 20. Now both groups have the same number of heads up.

Proof

Let the number of heads in the group of 80 be \(h_{80}\).

There are 20 coins with heads up initially, so immediately after we split up the groups, the number of heads in the group of 20 is \(20 - h_{80}\).

When we flip the coins in the group of 20, the number of heads in the group becomes \(20 - (20 - h_{80}) = h_{80}\). Thus now the two groups have the same number of heads showing.
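A minimal simulation of the argument, as a sketch: the coins are represented as booleans (True meaning heads up) and shuffled to stand in for the blindfold.

```python
import random


def split_and_flip(coins, heads_total):
    """Take any `heads_total` coins as the small group and flip them all.

    Returns the number of heads in (small group, large group).
    """
    small = [not coin for coin in coins[:heads_total]]
    large = coins[heads_total:]
    return sum(small), sum(large)


rng = random.Random(7)
coins = [True] * 20 + [False] * 80
for _ in range(1000):
    rng.shuffle(coins)  # blindfolded: we don't know where the heads are
    small_heads, large_heads = split_and_flip(coins, 20)
    assert small_heads == large_heads

print(split_and_flip([True] * 20 + [False] * 80, 20))  # (0, 0)
```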

Gold chain

You want to stay at an inn for seven nights. You only have a gold chain with seven links. The innkeeper agrees to accept this in payment for the stay, but you only want to pay for one night at a time.

You start to cut a link, but the innkeeper wants you to minimize the damage to the chain. What is the minimum number of cuts you must make, provided the innkeeper is happy to return previously given links as change?

This is possible in one cut.

Cut a single link, the third one, dividing the chain into lengths of 1, 2, and 4. Then follow this day-by-day plan:

- Give 1 link.

- Give 2 links, get 1 link as change.

- Give 1 link.

- Give 4 links, get 3 links as change.

- Give 1 link.

- Give 2 links, get 1 link as change.

- Give 1 link.

This is equivalent to counting in binary.
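The binary view can be made concrete with a small sketch: after night \(n\) the innkeeper holds exactly the pieces whose lengths are the binary digits of \(n\), and each night’s exchange is the difference between consecutive holdings. The function names are illustrative.

```python
PIECES = (1, 2, 4)  # piece lengths after cutting the third of seven links


def held_by_innkeeper(night):
    """Pieces the innkeeper holds after `night` nights: the binary digits of `night`."""
    return {p for p in PIECES if night & p}


def exchange(night):
    """Pieces handed over, and pieces returned as change, on the given night."""
    before, after = held_by_innkeeper(night - 1), held_by_innkeeper(night)
    return sorted(after - before), sorted(before - after)


for night in range(1, 8):
    give, change = exchange(night)
    print(f"night {night}: give {give}, change {change}")
```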

Handshakes

A man and his wife are at a party. In the party there are 4 other couples for a total of 5 couples. During the party some people shake hands, however no one shakes hands with their spouse, and no one shakes hands with themselves.

At the end of the party the man asks all the other guests at the party (including his wife) how many different people they shook hands with. Each person gives him a different answer.

How many people did the man shake hands with?

The man shook hands with 4 people.

Proof

Let us label each person with the number of people whose hands they have shaken.

Everyone has shaken hands with at most 8 people, since there are only 8 people at the party besides that person and their spouse. The man asked 9 people and got 9 different answers, each between 0 and 8, so for every number between 0 and 8 there is exactly one person who has shaken that many hands.

#8 must have shaken hands with everyone else other than their spouse. Thus the spouse must be #0, since everyone else has shaken at least one hand (#8’s). By the same logic, since all of the remaining people have shaken hands with #8, and none with #0, #7 must be married to #1. Likewise, #6 is married to #2 and #5 is married to #3.

The only people left are #4 and the man. Thus the man must be married to #4. Thus the man must have shaken hands with #8, #7, #6 and #5, but not #0, #1, #2, #3 or his wife #4. This gives 4 handshakes.
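The pairing in the proof can be sanity-checked by building one party that fits it. The sketch below is an illustrative construction (an assumption, not part of the puzzle): give guest #k the value k and the man the value 4, and let two people shake hands exactly when they are not married and their values add up to at least 9. It checks that the guests’ answers come out as 0 to 8 and that the man shakes 4 hands; this shows such a party exists, while the proof above shows the answer is forced.

```python
# Guests are labelled 0..8 by the answer they give; "M" is the man.
value = {k: k for k in range(9)}
value["M"] = 4

# Couples from the proof: #8 with #0, #7 with #1, #6 with #2, #5 with #3, #4 with the man.
spouse = {}
for a, b in [(8, 0), (7, 1), (6, 2), (5, 3), (4, "M")]:
    spouse[a], spouse[b] = b, a


def shook_hands(a, b):
    """Illustrative rule: different people, not married, values summing to at least 9."""
    return a != b and spouse[a] != b and value[a] + value[b] >= 9


people = list(value)
handshakes = {p: sum(shook_hands(p, q) for q in people) for p in people}
print(handshakes["M"])  # 4
```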

Jewel thieves

Two thieves conspire to steal a valuable necklace made of diamonds and rubies. The jewels are evenly spaced, but the diamonds and rubies are arbitrarily arranged. After they take it home, they decide that the only way to divide the jewels fairly is to physically cut the necklace in half.

Prove that, if there is an even number of diamonds and an even number of rubies, it’s possible to cut the necklace into two pieces, each of which contains half the diamonds and half the rubies.

Note that the necklace must be divided at two opposite points, as each thief will have to end up with the same number of jewels.

Start with arbitrary provisional cutting points which divide the necklace in two. If one side has more diamonds than the other, you rotate the cut by one jewel. If the switched jewels were the same, it changes nothing and you rotate again. If they’re different, the number of diamonds on one side will either go up one or down one. Eventually, by the time you’ve rotated the cut 180 degrees, the sides of the original cut will have swapped.

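Each rotation changes the number of diamonds on one side by at most one, so the difference between the two sides changes by at most two, and it is always even because the total number of diamonds is even. Since the difference has been negated by the end, at some point it must have been exactly zero. If the diamonds are equal then the rubies are also equal, since each half has the same number of jewels.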
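The rotating-cut argument translates directly into a sketch. It assumes the necklace is given as a string of `D` (diamond) and `R` (ruby) jewels and returns the start `k` of one half `necklace[k:k + m]`, where `m` is half the length; the name `fair_cut` is illustrative.

```python
def fair_cut(necklace):
    """Return k so that necklace[k:k + m] holds half the diamonds (m = len // 2)."""
    n = len(necklace)
    m = n // 2
    target = necklace.count("D") // 2
    diamonds = necklace[:m].count("D")  # diamonds in the provisional half
    for k in range(m + 1):
        if diamonds == target:
            return k
        # Rotate the cut by one jewel: necklace[k] leaves the half, necklace[k + m] joins.
        diamonds += (necklace[(k + m) % n] == "D") - (necklace[k] == "D")
    raise ValueError("needs an even number of diamonds and of rubies")


necklace = "DDDRDRRRDRDR"
k = fair_cut(necklace)
print(k, necklace[k:k + len(necklace) // 2])  # 1 DDRDRR
```

Because both halves always contain the same number of jewels, balancing the diamonds automatically balances the rubies, so the function only needs to track one count.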

Mislabelled marble cans

You have 3 cans. One is labelled “black” and contains only black marbles, one is labelled “white” and contains only white marbles, and the last is labelled “mixed” and contains both black and white marbles.

All labels switched

Unfortunately, all the labels get switched so that none of the cans have their original label. How many marbles do you have to draw to determine which can is which?

You only need to draw one marble.

Proof

Draw a marble from the can labelled “mixed”.

If you draw a black marble it must be the “black” can since it cannot actually be the “mixed” can. Thus the can currently labelled “black” is the white can, since it cannot be either the “black” or “mixed” cans. Finally the can labelled “white” must be the mixed can.

Similarly if a white marble is drawn, the can labelled “mixed” is really “white”, the can labelled “white” is really “black” and the can labelled “black” is really “mixed”.

Two labels switched

What if only two of the labels were switched and one remained the same? How many marbles do you need to draw now?

You need to draw two marbles.

Proof

First note that one marble is not sufficient, since there are 3 possible swaps and a single marble can only distinguish between two possibilities.

Start by drawing a marble from the can labelled “mixed”.

If the marble is white, draw a marble from the can labelled “black”. If this second marble is white, then the “mixed” label is correct, and the “black” label was swapped with the “white” label. If the second marble is black, then the “black” label is correct and the “mixed” label was swapped with the “white” label.

If the first marble drawn was black then follow the above analysis with black and white reversed.

Marked prisoners

A group of 200 perfect logicians are taken prisoner. Each has either an X or an O marked on their forehead. They can all see each other, and the marks on the others’ foreheads. They have no way of seeing the mark on their own forehead. They are not allowed any communication between each other at all, on pain of death.

It happens that 100 of the prisoners have the X mark and the other 100 have the O mark. However, none of them know exactly how many people have the X mark and how many people have the O mark.

Each night, at midnight, any prisoner who can correctly determine the mark on their forehead may leave. They know their marks are either X or O.

One day a careless guard was accidentally overheard saying:

“I can see someone with an X mark on their forehead”

He is quickly disciplined, but everyone heard what he said.

Who leaves the prison, and on what night?

On the 100th night, all 100 prisoners with an X mark will leave. On the 101st night, the 100 remaining prisoners with the O mark will leave.

Proof

In the case of one prisoner with the X mark, he looks around and sees that no one else has an X. Thus he is the only one the guard could be referring to. Thus we have:

Theorem 1: If there is only one prisoner with an X mark, he leaves on the first night.

Let us set up the hypothesis that:

Hypothesis 1: If there are \(n\) prisoners with the X mark, nothing will happen for the first \((n-1)\) nights, then they will all leave on the \(n\)th night.

If there are \((n+1)\) prisoners with the X mark, then they all see \(n\) other prisoners with the X mark. Thus they can all deduce that there must be either \(n\) or \((n+1)\) prisoners with the X mark.

If we assume Hypothesis 1, then on the first \(n\) nights nothing will happen. After the \(n\)th night they will determine that there cannot be exactly \(n\) prisoners with the X mark, since otherwise those \(n\) prisoners would have left on the \(n\)th night. Hence they can all deduce that they each have an X mark and they will all leave on the next night, the \((n+1)\)th night. Thus we have:

Theorem 2: If Hypothesis 1 is true for a given \(n\) then it is also true for the case \((n+1)\).

We can see that Hypothesis 1 is true for the case \(n = 1\) by Theorem 1. Then using induction with Theorem 2 we can see that Hypothesis 1 is true for all \(n \ge 1\).

In our problem, we have the case where \(n = 100\), thus all the prisoners with the X mark will leave on the 100th night. The prisoners with the O mark will see them leave and can deduce that they do not have an X. Since O is the only other option, they will leave on the next night, the 101st night.

Extra Consideration

What is the piece of information that the guard provides that each prisoner did not already know?

Each prisoner already knew that there was at least one X marked prisoner. They also all knew that everyone else knew, and that everyone knew that everyone knew. However, this does not continue indefinitely.

In our problem, everyone knew that there were at least 99 X marked prisoners. But when we get to meta-knowledge, we can only say that everyone knew that everyone knew that there are at least 98 X marked prisoners. Continuing further, our strongest statement of meta-meta-knowledge is that everyone knew that everyone knew that everyone knew that there are at least 97 X marked prisoners.

This continues until we can’t say anything about the number of X marked prisoners.

When the guard speaks, the fact that there is at least 1 X marked prisoner becomes common knowledge. Importantly, now everyone knows that everyone knows that there is at least one X marked prisoner. And everyone knows that everyone knows that everyone knows… and so on indefinitely.

Sufficiently deep in this chain of meta-knowledge, this adds new information. This is known as common knowledge.

Made famous as xkcd’s Blue Eyes puzzle (solution).

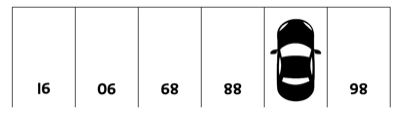

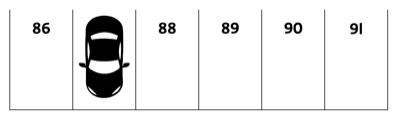

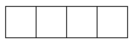

Parking lot

What is the number of the parking space that the car is occupying?

The car is in space 87.

This becomes more obvious if we turn the image upside down. The spaces are actually numbered from 86 to 91:

Party hats

There is a party of logicians, and just towards the end of the party the host seats everyone down in a circle. They put a hat on each person’s head such that everyone else could see it but the person themselves could not.

There were many, many different colours of hats. The host instructed them that a bell would be rung at regular intervals: at the moment when a person knew the colour of their own hat, they must leave at the next bell.

As they were all very good logicians, leaving at the wrong bell was unacceptable and would be disastrous to their reputations. The host reassures the group by stating that the puzzle would not be impossible for anybody present.

How did they all leave?

First we determine that there must be at least 2 of each hat colour. If a person had a unique hat colour, they would see no other hat of that colour, so they could in no way determine what their own colour was. However, the host said that everyone has enough information to solve the puzzle and leave. Thus there must be at least two people with each hat colour.

We now form a hypothesis and prove it via induction:

Hypothesis: If there are \(n\) people with a hat of colour \(c\), then they will all leave on the \((n-1)\)th bell.

If there are only two people for a given colour \(c\), then both of them will count only one hat of colour \(c\) in the group. Thus they will both know that their own hat colour must also be \(c\). Therefore they both leave on the first bell, satisfying the Hypothesis.

Suppose there are \(n\) people with hats of a given colour \(c\), and suppose the Hypothesis is true for \(n-1\). Then everyone with hat colour \(c\) will see \(n-1\) people with colour \(c\). If there were only \(n-1\) people with colour \(c\), they would all leave on the \((n-2)\)th bell. When none of them leave on that bell, everyone with hat colour \(c\) will conclude there must be more than \(n-1\) people with a hat of colour \(c\). Thus they will determine that they must have a hat of colour \(c\), and leave on the \((n-1)\)th bell.

Therefore, because the Hypothesis is true for \(n=2\), and the Hypothesis being true for \(n-1\) implies it is true for \(n\), we have that the Hypothesis is true for all \(n \ge 2\).

Therefore, everyone eventually leaves. (Compare this with Marked prisoners).

Sorting hats

A group of prisoners are offered a chance of freedom. The prisoners will be brought in one at a time, and a black or white hat will be placed on their heads as they come in.

The prisoners must form a line with white hats on one side and black hats on the other. The prisoners can’t communicate, and can only choose their position in the line.

How can the prisoners succeed?

As each prisoner enters, they simply join the line at the point between adjacent black and white hats. If there are only hats of one colour, they can join at either end of the line.

This keeps the line valid at all times. Note that the prisoners don’t need to communicate beforehand.
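A small simulation of the strategy, as a sketch. The key detail is that `join_line` chooses the position from the existing line alone, without looking at the new prisoner’s own hat, since they cannot see it. The names are illustrative.

```python
import random


def join_line(line, hat):
    """Insert `hat` between the first adjacent black/white pair, or at the end.

    The position depends only on `line`: the new prisoner cannot see `hat`.
    """
    for i in range(1, len(line)):
        if line[i - 1] != line[i]:
            return line[:i] + [hat] + line[i:]
    return line + [hat]


def is_valid(line):
    """A valid line changes colour at most once."""
    return sum(a != b for a, b in zip(line, line[1:])) <= 1


rng = random.Random(11)
line = []
for _ in range(50):
    line = join_line(line, rng.choice("BW"))
    assert is_valid(line)
print("".join(line))
```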

Spinning room

You are trapped in a small square room. In the middle of each side of the room there is a hole. In each hole there is a push button that can be in either an off or on setting. You can’t see in the holes but you can reach your hands in them and push the buttons. You can’t tell by feel whether they are in the on or off position.

You may stick your hands in any two holes at the same time and push either, or both of the buttons as you please. Nothing will happen until you remove both hands from the holes. You succeed if you get all the buttons into the same position, after which time you will immediately be released from the room.

Unless you escape, after removing your hands the room will spin around, disorienting you so you can’t tell which side is which.

How can you escape?

Do the following in order until you escape:

- Push buttons on two opposite walls.

- Push buttons on two adjacent walls.

- Push buttons on two opposite walls.

- Push any one button.

- Push buttons on two opposite walls.

- Push buttons on two adjacent walls.

- Push buttons on two opposite walls.

You should be free by the end of the last instruction at latest.

Proof

Let us label all the states we could be in:

- \(A\): Two buttons ON, on opposite sides.

- \(B\): Two buttons ON, on adjacent sides.

- \(C\): Three buttons in the same state, and the other one different.

If we push two opposite buttons while in state \(A\), we go free. The other states remain the same. Thus first we carry this out, and if we are still in the room, we must be in state \(B\) or \(C\).

Now, if we are in state \(B\) and we push two adjacent buttons, then we either go free or go to state \(A\). But from above we know how to escape from state \(A\). Thus we push two adjacent buttons, then two opposite buttons. If we are still in the room, the starting position must have been state \(C\).

Note that up to this point we have been pushing two buttons at a time, which never changes whether the number of ON buttons is odd or even, so state \(C\) always remains in state \(C\).

Now we know that we are in state \(C\). Pushing any one button will convert the state to either \(A\) or \(B\) (or free us). But we know how to escape from \(A\) or \(B\), so we just repeat the procedure above.

In summary the possible states we are in after each step are:

\(\{A,B,C\}\) \(\xrightarrow{1} \{B,C\}\) \(\xrightarrow{2} \{A,C\}\) \(\xrightarrow{3} \{C\}\) \(\xrightarrow{4} \{A,B\}\) \(\xrightarrow{5} \{B\}\) \(\xrightarrow{6} \{A\}\) \(\xrightarrow{7} \{\}\)
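The state argument can be verified exhaustively with a short sketch. It models the four buttons as a tuple of booleans in wall order, treats a push as toggling a button, and lets the room’s rotation pick any offset before each instruction; a state is dropped once all four buttons match. `MOVES` encodes the seven instructions as offsets relative to an unknown starting wall (an encoding chosen for this example).

```python
from itertools import product

# The seven instructions, as button offsets relative to an unknown starting wall.
MOVES = [(0, 2), (0, 1), (0, 2), (0,), (0, 2), (0, 1), (0, 2)]


def is_free(state):
    """You are released once all four buttons are in the same position."""
    return len(set(state)) == 1


def push(state, offsets, rotation):
    """Toggle the buttons at the given offsets after the room has rotated."""
    buttons = list(state)
    for offset in offsets:
        i = (offset + rotation) % 4
        buttons[i] = not buttons[i]
    return tuple(buttons)


def run_strategy():
    """Track every state we could still be trapped in after each instruction."""
    states = {s for s in product((False, True), repeat=4) if not is_free(s)}
    sizes = [len(states)]
    for offsets in MOVES:
        states = {push(s, offsets, rot) for s in states for rot in range(4)}
        states = {s for s in states if not is_free(s)}
        sizes.append(len(states))
    return states, sizes


remaining, sizes = run_strategy()
print(remaining, sizes)  # set() [14, 12, 10, 8, 6, 4, 2, 0]
```

The set sizes match the summary: 14 = 2 + 4 + 8 states in \(A\), \(B\) and \(C\), shrinking to \(\{B,C\}\) (12), \(\{A,C\}\) (10), and so on down to none.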

Short and tall

Two hundred students are arranged in 10 rows of 20 students. The shortest student in each column is identified, and the tallest of these is marked \(A\). The tallest student in each row is identified, and the shortest of these is marked \(B\).

If \(A\) and \(B\) are different people, who is taller?

\(B\) is taller.

Proof

There are three different cases:

- If \(A\) and \(B\) stand in the same row, then \(B\) is taller, since we know that \(B\) is the tallest student in their row.

- If \(A\) and \(B\) stand in the same column, then again \(B\) is taller, since we know that \(A\) is the shortest student in their column.

- If \(A\) and \(B\) share neither a row nor a column, then let \(C\) be the student who’s in the same column as \(A\) and the same row as \(B\). Then \(C\) is shorter than \(B\) (who is the tallest in their row) and taller than \(A\) (who is the shortest in their column), so \(B > C > A\).

In every case, \(B\) is taller than \(A\).
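A randomized check of the claim, as a sketch, assuming all heights are distinct (so \(A\) and \(B\) have equal height only when they are the same student). Heights are arbitrary integers standing in for millimetres.

```python
import random


def mark_students(heights):
    """heights[row][col]. Returns the heights of (A, B) as defined in the puzzle."""
    columns = list(zip(*heights))
    a = max(min(column) for column in columns)  # tallest of the column minimums
    b = min(max(row) for row in heights)        # shortest of the row maximums
    return a, b


rng = random.Random(5)
for _ in range(1000):
    flat = rng.sample(range(1000, 2000), 200)  # distinct heights
    heights = [flat[r * 20:(r + 1) * 20] for r in range(10)]  # 10 rows of 20
    a, b = mark_students(heights)
    assert a <= b  # equal only when A and B are the same student

print(mark_students([[5, 1], [2, 4]]))  # (2, 4)
```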

The bookworm

A 5-volume set of books sits on a shelf, in order. Each volume is 1 inch thick.

A bookworm eats its way from the front cover of the first volume to the back cover of the last volume. How far did the bookworm travel?

3 inches.

Proof

The front of volume 1 is directly next to volume 2 when arranged on the shelf. Likewise, the back of volume 5 is next to volume 4.

Thus the bookworm does not eat through volumes 1 and 5, only 2, 3 and 4. It travels through 3 books, thus 3 inches.

Birthday problem

How big does a group of people need to be before there is at least a 50% chance that two people share a birthday?

23 people.

Proof

For simplicity, ignore leap years. This won’t significantly affect the result.

Assume that all birthdays are equally likely. If birthdays are unequally distributed, then it only makes it more likely that people share a birthday.

We will determine the probability that no one shares a birthday with anyone. Adding people one at a time:

- The first person won’t share a birthday (as they are the only person). i.e. they have a \(1 = \frac{365}{365}\) chance of being unique.

- The second person only has to avoid the first person’s birthday for a \(\frac{364}{365}\) chance of being unique.

- The \(n\)th person has to avoid the \(n-1\) birthdays already taken, for a \(\frac{366-n}{365}\) chance of being unique.

Then the probability \(P'(n)\) that no one shares a birthday is the product of the probabilities that each individual has a unique birthday:

\[P'(n) = \frac{365 \times 364 \times ... \times (366-n)}{365^n} = \frac{365!}{(365-n)! 365^n}\]

The probability \(P(n)\) that at least two people share a birthday is the complement:

\[P(n) = 1 - \frac{365!}{(365-n)! 365^n}\]

23 is the smallest number for which \(P(n) > 0.5\).

Other values

By the pigeonhole principle, you need 366 people to guarantee that two people share a birthday (367 to include leap years).

However, the probability climbs very quickly to close to 1 with much smaller groups:

| n | P(n) |
|---|---|
| 1 | 0 |
| 10 | .117 |
| 23 | .507 |
| 50 | .970 |
| 70 | .999 |
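The formula is easy to evaluate numerically. A minimal sketch, under the same assumptions (365 equally likely days, no leap years), that reproduces the answer of 23 and the values in the table above:

```python
def p_shared(n, days=365):
    """Probability that at least two of n people share a birthday."""
    p_unique = 1.0
    for k in range(n):
        p_unique *= (days - k) / days  # person k+1 avoids the k birthdays already taken
    return 1 - p_unique


smallest = next(n for n in range(1, 367) if p_shared(n) > 0.5)
print(smallest)  # 23
for n in (1, 10, 23, 50, 70):
    print(n, round(p_shared(n), 3))
```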

Birthday line

At a movie theater, the manager announces that they will give a free ticket to the first person in line whose birthday is the same as someone who has already bought a ticket. You have the option of getting in line at any time. What position in line gives you the greatest chance of being the first duplicate birthday?

Assume that you don’t know anyone else’s birthday, and that birthdays are distributed randomly throughout the year.

Position 20.

Proof

Your probability of getting a free ticket when you are the \(n\)th person in line is (probability that none of the first \(n-1\) people share a birthday) \(\times\) (probability that you share a birthday with one of the first \(n-1\) people):

\[P(n) = \frac{365 \times 364 \times ... \times (365-(n-2))}{365^{n-1}} \frac{n-1}{365}\]

We want the first \(n\) such that \(P(n) > P(n+1)\):

\[\begin{align} \frac{365 \times 364 \times ... \times (365-(n-2))}{365^{n-1}} \frac{n-1}{365} & > \frac{365 \times 364 \times ... \times (365-(n-2)) \times (365-(n-1))}{365^n} \frac{n}{365} \\ 365 (n-1) & > (365 - (n-1)) n \\ n^2 - n - 365 & > 0 \end{align}\]

Solving for \(n\) we find roots at \(n \approx -18.6\) and \(n \approx 19.6\). The negative root is invalid for this problem.

Thus we need \(n > 19.6\) giving a minimum of \(n = 20\).
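Evaluating \(P(n)\) directly confirms the maximum. A short sketch, using the same uniform-birthday assumption:

```python
def p_first_match(n, days=365):
    """Probability that person n in line is the first to match an earlier birthday."""
    p_no_match = 1.0
    for k in range(n - 1):
        p_no_match *= (days - k) / days  # the first n-1 people all differ
    return p_no_match * (n - 1) / days  # and person n matches one of them


best = max(range(1, 367), key=p_first_match)
print(best)  # 20
```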

Caterpillar on a bungee

A caterpillar crawls along a very flexible 1 m long bungee cord. It starts off at one end of the bungee cord, and starts crawling to the other end. When the caterpillar has crawled 1 cm, the bungee cord extends by a meter to 2 m. When the caterpillar has crawled another 1 cm, the bungee cord extends by another meter to 3 m. This continues on, each time the caterpillar progresses by 1 cm, the bungee cord extends by another meter.

Will the caterpillar ever reach the other side?

Note that as the bungee cord is stretched, the caterpillar’s relative position on the cord remains the same, while its absolute position increases.

Yes, the caterpillar does reach the other side, eventually.

Proof

Because the relative position of the caterpillar remains the same when the bungee cord is stretched, we will look at the position of the caterpillar as a fraction of the bungee cord length, \(L\). It starts at position \(0L\), and must reach position \(1L\).

In the first step, the caterpillar walks \(\frac{1}{100} L\). In the next step, the caterpillar walks \(\frac{1}{200} L\), then \(\frac{1}{300} L\), and so on. The total distance the caterpillar has travelled after \(n\) steps is:

\[\sum_{x=1}^n \frac{1}{100 x} L = \frac{L}{100} \sum_{x=1}^n \frac{1}{x}\]

We require the distance to reach \(1L\) for the caterpillar to reach the other side, hence we need some \(n\) such that:

\[\begin{align} L & \le \frac{L}{100} \sum_{x=1}^n \frac{1}{x} \\ 100 & \le \sum_{x=1}^n \frac{1}{x} \end{align}\]

\(\sum_{x=1}^n \frac{1}{x}\) is the harmonic series, which diverges. Thus it will eventually exceed 100 for some (very large) value of \(n\). Therefore the caterpillar will reach the other side. In general, this is true for a cord of any starting length.
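Summing the harmonic series term by term is only practical for small targets, so the sketch below checks the standard estimate \(n \approx e^{T - \gamma}\) (from \(H_n \approx \ln n + \gamma\)) against direct summation for a target of \(T = 10\), which would correspond to a hypothetical caterpillar crawling 10 cm per stretch, before applying it to the real target \(T = 100\).

```python
import math

EULER_GAMMA = 0.5772156649015329


def steps_by_summation(target):
    """Smallest n with H_n >= target, adding 1/x one step at a time."""
    total, n = 0.0, 0
    while total < target:
        n += 1
        total += 1 / n
    return n


def steps_by_estimate(target):
    """Approximate n from H_n ~ ln(n) + gamma."""
    return math.exp(target - EULER_GAMMA)


print(steps_by_summation(10), round(steps_by_estimate(10)))
print(f"{steps_by_estimate(100):.2e}")  # 1.51e+43
```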

By the Euler-Maclaurin formula, the partial sums of the harmonic series are approximated by:

\(H_n \approx \ln n+\gamma\) where \(\gamma \approx 0.5772\)

We need to reach \(H_n \ge 100\). Thus an approximation for the number of steps taken by the caterpillar is:

\[n \approx e^{H_n - \gamma} \approx e^{100 - \gamma} \approx 1.5 \times 10^{43}\]

Chameleons

On a remote island there is a population of rare chameleons, each one being either red, green or blue. If two chameleons of different colours meet, they both change to the third colour. For example, if a red chameleon meets a blue one, they both turn green. Initially their numbers are as follows:

- 13 red chameleons

- 15 green chameleons

- 17 blue chameleons

Is it ever possible for all the chameleons to become the same colour?

No, the chameleons can never all be the same colour.

Proof

Let \(c_B\) be the number of blue chameleons, \(c_G\) be the number of green chameleons, and \(c_R\) be the number of red chameleons.

Let’s look at the number of each colour modulo 3. For any arrangement of colours \(i\), \(j\) and \(k\), write the counts at time \(t\) as:

\[N_t \equiv \{ c_i, c_j, c_k\} \pmod 3\]

Now suppose a chameleon of colour \(i\) meets one of colour \(j\). By our rules the new counts are:

\[\begin{align} N_{t + \Delta t} & \equiv \{ c_i - 1, c_j - 1, c_k + 2\} \pmod 3 \\ & \equiv \{ c_i - 1, c_j - 1, c_k - 1\} \pmod 3 \end{align}\]

Every count decreases by 1 modulo 3, so the differences between the counts can never change (modulo 3).

Initially we have:

\[N_0 \equiv \{ c_R, c_G, c_B\} \equiv \{ 1, 0, 2\} \pmod 3\]

For all the chameleons to be the same colour we would require the other two colours to both have 0 chameleons, which would make those two counts equal modulo 3. However, from our initial condition this can never happen, because no two of the initial counts are the same modulo 3.
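An exhaustive search confirms the invariant argument for these numbers. The sketch below explores every population reachable from (13, 15, 17) by breadth-first search over (red, green, blue) counts; since the total stays at 45, there are at most 1,081 possible states.

```python
from collections import deque


def reachable(start):
    """All (red, green, blue) counts reachable from `start` by meetings."""
    seen = {start}
    queue = deque([start])
    while queue:
        counts = queue.popleft()
        for i in range(3):
            for j in range(i + 1, 3):
                if counts[i] and counts[j]:  # one chameleon of each colour meets
                    k = 3 - i - j            # index of the third colour
                    nxt = list(counts)
                    nxt[i] -= 1
                    nxt[j] -= 1
                    nxt[k] += 2
                    nxt = tuple(nxt)
                    if nxt not in seen:
                        seen.add(nxt)
                        queue.append(nxt)
    return seen


states = reachable((13, 15, 17))
single_colour = [s for s in states if s.count(0) == 2]
print(single_colour)  # []
```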

Colliding ants

You have a large supply of ants. Each ant moves at 1 m/s. When two ants collide, they reverse directions.

Ants on a stick

Suppose you have a 1m long stick. On the stick are ants, randomly placed and facing left or right randomly. When an ant reaches either end of the stick, it falls off.

Find the upper limit of the time it takes for the stick to be clear of ants, regardless of the original setup.

The stick will be clear of ants in, at most, 1 second.

Proof

When two ants collide and reverse direction, it is equivalent to the two ants walking past each other, because the identity of each ant doesn’t matter. Each ant then simply walks straight off the stick, travelling at most 1 m at 1 m/s, so on the 1m long stick all ants will have fallen off after at most 1 second.

Ants on a ring

Suppose you have a ring with a circumference of 1 m. There are 100 ants evenly spaced, 1 cm apart from each other on the ring. The ants are numbered in clockwise order so that #2 is just clockwise of #1, and #1 is just clockwise of #100, etc. At time \(t = 0\), all the prime-numbered ants (#2, #3, #5, … #97) start walking clockwise, while the other ants start walking counter-clockwise.

How far from its starting position is ant #1 at time \(t=1\) second?

Ant #1 will have moved 50 cm counter-clockwise along the ring (it will be on the opposite side of the ring).

Proof

As with the ants on a stick, two ants colliding is equivalent to them walking past each other if we don’t care about their identities. In that picture each ant travels 100 cm in 1 second, exactly once around the ring, so at \(t=1\) there will be an ant on each of the positions that were occupied at \(t=0\), though it may be a different ant from the original one.

However, since the ants actually reverse direction when they collide, the relative order of the ants remains unchanged. Ant #1 remains between #2 and #100, ant #2 remains between #1 and #3, etc.

Since all ants end on one of the starting points at \(t=1\), ant #1 shifts counter-clockwise by \(x\) cm for some integer \(-100 \le x \le 100\). Because the relative order of the ants remains the same, and there is an ant on each of the original positions at \(t=1\), all ants must have shifted counter-clockwise by the same distance \(x\) cm.

At any point in time, 25 ants are moving clockwise and 75 ants are moving counter-clockwise, as there are 25 prime numbers in the first 100 positive integers and a collision only swaps the directions of two ants. The velocities of all the ants therefore sum to 50 m/s counter-clockwise at any time \(t\), an average of 50 cm/s per ant. Thus by time \(t=1\) the ants have moved a combined 50 m counter-clockwise, and since every ant shifted by the same distance, each individual ant has moved counter-clockwise by 50 cm.
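The conclusion can be checked with a direct simulation, as a sketch. It measures positions in half-centimetre units so that every collision happens exactly on a grid point (one tick is 0.005 s at 1 m/s), counts positions as increasing clockwise, and tracks each ant’s unwrapped position so that the full displacement, rather than a position modulo the ring, is reported.

```python
def is_prime(n):
    return n >= 2 and all(n % d for d in range(2, int(n ** 0.5) + 1))


def simulate_ring(num_ants=100, ring=200, ticks=200):
    """Unwrapped positions (half-cm) of ants #1..#num_ants after `ticks` ticks."""
    step = ring // num_ants
    pos = [step * i for i in range(num_ants)]  # ant #k starts at step * (k - 1)
    vel = [1 if is_prime(k) else -1 for k in range(1, num_ants + 1)]  # +1 = clockwise
    for _ in range(ticks):
        for i in range(num_ants):
            pos[i] += vel[i]
        # Two ants on the same point have just collided: both reverse.
        at = {}
        for i in range(num_ants):
            at.setdefault(pos[i] % ring, []).append(i)
        for ants in at.values():
            if len(ants) == 2:
                a, b = ants
                vel[a], vel[b] = -vel[a], -vel[b]
    return pos


final = simulate_ring()
print(final[0] / 2, "cm")  # -50.0 cm (negative = counter-clockwise)
```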

Everything equals 6

Make the following statements true by only adding mathematical operators:

\[\begin{align} 0 \quad 0 \quad 0 & = 6 \\ 1 \quad 1 \quad 1 & = 6 \\ 2 \quad 2 \quad 2 & = 6 \\ 3 \quad 3 \quad 3 & = 6 \\ 4 \quad 4 \quad 4 & = 6 \\ 5 \quad 5 \quad 5 & = 6 \\ 6 \quad 6 \quad 6 & = 6 \\ 7 \quad 7 \quad 7 & = 6 \\ 8 \quad 8 \quad 8 & = 6 \\ 9 \quad 9 \quad 9 & = 6 \end{align}\]

For example \(2 + 2 + 2 = 6\).

\[\begin{align} (0! + 0! + 0!)! & = 6 \\ (1 + 1 + 1)! & = 6 \\ 2 + 2 + 2 & = 6 \\ 3 \times 3 - 3 & = 6 \\ 4 + 4 - \sqrt{4} & = 6 \\ 5 + 5 / 5 & = 6 \\ 6 + 6 - 6 & = 6 \\ 7 - 7 / 7 & = 6 \\ \left(\sqrt{8 + 8 / 8}\right)! & = 6 \\ (9 + 9) / \sqrt{9} & = 6 \end{align}\]
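A quick arithmetic check of all ten lines, as a sketch using Python’s `math.factorial` and `math.sqrt`:

```python
from math import factorial, sqrt

# One expression per digit, mirroring the solutions above.
SOLUTIONS = {
    0: lambda: factorial(factorial(0) + factorial(0) + factorial(0)),
    1: lambda: factorial(1 + 1 + 1),
    2: lambda: 2 + 2 + 2,
    3: lambda: 3 * 3 - 3,
    4: lambda: 4 + 4 - sqrt(4),
    5: lambda: 5 + 5 / 5,
    6: lambda: 6 + 6 - 6,
    7: lambda: 7 - 7 / 7,
    8: lambda: factorial(round(sqrt(8 + 8 / 8))),  # math.factorial needs an int
    9: lambda: (9 + 9) / sqrt(9),
}

for digit, expr in SOLUTIONS.items():
    print(digit, expr())
```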

An interesting thing to note is that if we had four numbers instead of three then for any \(x \ne 0\):

\[\left((x + x + x) / x\right)! = 6\]

Factorial zeros

How many zeros are there at the end of 100!?

100! is 100 factorial, which is all the numbers from 1 to 100 multiplied together.

100! has 24 trailing zeros.

Proof

The number of zeros at the end of a number tells us how many times 10 divides into the number. Each 10 that divides a number can be broken down into a 5 and a 2.

In a factorial, factors of 2 are very common: every even number adds at least one 2. Thus for every 5, there will always be a 2 available. Therefore we only need to count how many times 5 goes into the factorial.

Every multiple of 5 introduces another 5 to the factorial’s factors. There are \(\left\lfloor \frac{n}{5} \right\rfloor\) multiples of 5 in the numbers up to \(n\). However, we also need to count an extra 5 for every multiple of \(5^2\), and so on for all powers of 5. We can stop once the powers of 5 exceed \(n\).
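The counting rule can be sketched directly and compared against the trailing zeros of the exact value from `math.factorial` (a brute-force cross-check that is only practical for modest \(n\)):

```python
import math


def factorial_trailing_zeros(n):
    """Count the factors of 5 in n!: floor(n/5) + floor(n/25) + ..."""
    count, power = 0, 5
    while power <= n:
        count += n // power
        power *= 5
    return count


def trailing_zeros_direct(n):
    """Count trailing zeros of the exact decimal value of n!."""
    digits = str(math.factorial(n))
    return len(digits) - len(digits.rstrip("0"))


print(factorial_trailing_zeros(100))  # 24
```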

Thus to calculate the number of times 5 divides \(n!\), and thus the number of zeros at the end, we have:

\[\sum_{i=1}^{\infty} \left\lfloor \frac{n}{5^i} \right\rfloor\]

For \(n=100\):

\[\left\lfloor \frac{100}{5} \right\rfloor + \left\lfloor \frac{100}{25} \right\rfloor = 20+4 = 24\]

Friends at a party

There is a party with a large number of people. Some people at the party are friends, some aren’t. All friendships are mutual.

Number of friends

Show that there are at least two people at the party who have the same number of friends.

Let \(n\) be the number of people at the party.

Since no one can be friends with themselves, everyone has between 0 and \(n-1\) friends.

If someone has \(n-1\) friends then that person is friends with everyone else, so no one can have 0 friends.

Likewise if someone has 0 friends, no one can have \(n-1\) friends (be friends with everyone).

Thus there are only \(n-1\) possible numbers of friends and \(n\) people, so by the pigeonhole principle, at least two people have the same number of friends.

Mutual friends

Now show that any group of six people contains either 3 mutual friends or 3 mutual strangers.

Imagine the 6 people and pick any one of them, \(X\). Among the other 5, \(X\) has either at least 3 friends or at least 3 strangers.

If \(X\) has at least three friends, pick any three of them. Then either:

- The 3 of them are all mutual strangers.

- At least 2 of them are friends. Then these 2 and \(X\) are all mutual friends.

Both of these satisfy the condition.

If \(X\) has at least three strangers, pick any three of them. Then either:

- The 3 of them are all mutual friends.

- At least 2 of them are strangers. Then these 2 and \(X\) are all mutual strangers.

Both of these also satisfy the condition.
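The claim can also be checked exhaustively with a short Python sketch (function names are our own): it tries every way of marking each pair as friends or strangers and looks for three people who are all friends or all strangers. With 6 people every pattern contains such a trio, while with 5 people at least one pattern avoids it.

```python
from itertools import combinations, product

def has_mono_triangle(n, friends):
    """friends maps each pair (a, b) with a < b to True (friends) or False (strangers)."""
    for a, b, c in combinations(range(n), 3):
        if friends[(a, b)] == friends[(a, c)] == friends[(b, c)]:
            return True  # 3 mutual friends or 3 mutual strangers
    return False

def every_party_has_triangle(n):
    """True if every friends/strangers pattern on n people has such a trio."""
    pairs = list(combinations(range(n), 2))
    for pattern in product([False, True], repeat=len(pairs)):
        if not has_mono_triangle(n, dict(zip(pairs, pattern))):
            return False
    return True

print(every_party_has_triangle(5))  # False
print(every_party_has_triangle(6))  # True
```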

General case

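In general, the smallest group size that is guaranteed to contain either a group of \(a\) mutual strangers or a group of \(b\) mutual friends is called the Ramsey number \(R(a,b)\). The argument above shows that \(R(3,3) \le 6\). Five people are not enough: seat them around a table so that each person is friends only with their two neighbors, and there are neither 3 mutual friends nor 3 mutual strangers. Hence \(R(3,3) = 6\).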

Fuel circuit

\(N\) fuel canisters are placed along a race track. In total they contain \(L\) liters of fuel.

Suppose a race car needs \(L\) liters of fuel to go around the race track once. The car's fuel consumption depends only on the distance travelled. The car can hold more than \(L\) liters of fuel.

Prove that there exists at least one point on the circuit from which the car can complete a full lap, starting with an empty tank.

Given \(N\) canisters, there is at least one canister with enough fuel to reach the next canister. Otherwise the total fuel in the canisters would not be sufficient to make it around the circuit.

Combine the next canister with the current canister at the location of the current canister, such that there are now \(N-1\) canisters. The total fuel is still \(L\). If the problem can be solved with these \(N-1\) canisters, the original problem can be solved since we’ve established that there was enough fuel to reach the next canister.

Now the same reasoning can be used to reduce the problem down to 1 canister which contains all \(L\) liters of fuel, at which point the problem is trivial.

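This single canister sits at the position of one of the original canisters. Starting there with an empty tank, the car can complete a full lap, which solves the original problem.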
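The merging argument above proves that a starting point exists but does not directly compute one. A common linear-time way to find one (a different technique from the proof, shown here only as a sketch) is to track the running balance of fuel collected minus fuel burned and start just after the point where that balance is lowest. The inputs `fuel` (liters in each canister) and `cost` (liters needed to reach the next canister) are our own hypothetical representation, assumed to be whole numbers with equal totals \(L\).

```python
def find_start(fuel, cost):
    """Index of a canister from which a full lap is possible.

    fuel[i] is the fuel in canister i; cost[i] is the fuel needed to drive
    from canister i to the next one (wrapping around).
    Assumes sum(fuel) == sum(cost).
    """
    balance = 0  # fuel collected minus fuel burned, starting at canister 0
    lowest = 0   # lowest balance seen so far (0 before setting off)
    start = 0
    for i in range(len(fuel)):
        balance += fuel[i] - cost[i]
        if balance < lowest:
            # The balance dips below every earlier value: start just after here.
            lowest = balance
            start = i + 1
    return start % len(fuel)

def completes_lap(fuel, cost, start):
    """Simulate a lap from `start` with an empty tank; False if the tank runs dry."""
    tank = 0
    n = len(fuel)
    for step in range(n):
        i = (start + step) % n
        tank += fuel[i] - cost[i]  # fuel left on arriving at the next canister
        if tank < 0:
            return False
    return True

fuel = [1, 2, 3, 4]
cost = [2, 3, 1, 4]
start = find_start(fuel, cost)
print(start, completes_lap(fuel, cost, start))  # 2 True
```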

Hidden ages

Alice is telling Bob about her children. Alice says she has three daughters and that the sum of their ages is the number of the house across the street.

Bob asks what their ages are.

Instead of answering directly, Alice tells Bob that the product of their ages is 36.

Bob says that this is not enough information to determine their ages.

Alice agrees and adds that her oldest daughter has beautiful blue eyes.

Bob now knows the ages of Alice’s daughters.

How old are Alice’s daughters?

The daughters are 2, 2 and 9 years old.

Proof

The possible ages that multiply to 36 are (along with their sums):

- 1, 1, 36 (38)

- 1, 2, 18 (21)

- 1, 3, 12 (16)

- 1, 4, 9 (14)

- 1, 6, 6 (13)

- 2, 2, 9 (13)

- 2, 3, 6 (11)

- 3, 3, 4 (10)

Bob knows the sum of the ages because he can see the house number. Since knowing the sum is not enough to determine the ages, the sum must be one of the sums that is not unique.

The only sum that is not unique is 13, which is the sum of (1, 6, 6) and also (2, 2, 9).

Next Alice tells Bob about her oldest daughter. This means that the ages cannot be (1, 6, 6), since there would then be two oldest daughters (both aged 6) rather than a single oldest daughter.

Hence the ages are 2, 2 and 9.
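The same elimination can be sketched in Python (the helper name `age_triples` is our own): list every triple of ages with product 36, keep only the sums shared by more than one triple, then keep only the triples with a single oldest daughter.

```python
from collections import defaultdict

def age_triples(product_of_ages):
    """All non-decreasing triples of positive integers with the given product."""
    triples = []
    for a in range(1, product_of_ages + 1):
        for b in range(a, product_of_ages + 1):
            if product_of_ages % (a * b) == 0:
                c = product_of_ages // (a * b)
                if c >= b:
                    triples.append((a, b, c))
    return triples

by_sum = defaultdict(list)
for triple in age_triples(36):
    by_sum[sum(triple)].append(triple)

# Bob could not decide, so the house number is a sum shared by several triples.
ambiguous = [t for ts in by_sum.values() if len(ts) > 1 for t in ts]
# A single oldest daughter means the largest age is not repeated.
answer = [t for t in ambiguous if t[1] < t[2]]
print(answer)  # [(2, 2, 9)]
```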

Hidden polynomial

I choose a polynomial with non-negative integer coefficients. You don't know how many coefficients are in the polynomial. Your goal is to find out what my polynomial is. You are only allowed to ask me to evaluate the polynomial at integer points.

What is the smallest number of queries you need to determine my polynomial?

Only 2 queries are required.

Proof

Let the polynomial you are trying to guess be \(p(x) = \sum c_i x^i\).

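First, suppose we try to determine \(p(x)\) with only one query, at the point \(a\). If \(a \ge 0\), we cannot distinguish \(p_1(x) = x\) from \(p_2(x) = a\): both evaluate to \(a\). If \(a < 0\), we cannot distinguish \(p_1(x) = x^2\) from \(p_2(x) = a^2\): both evaluate to \(a^2\). So one query is never enough.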

To solve the problem with two queries, first ask for \(p(1)\):

\[r_1 = p(1) = \sum c_i\]

Let \(b=r_1+1\). Since the coefficients of \(p(x)\) are non-negative, each \(c_i \le r_1\), so \(b\) is greater than all \(c_i\).

Next evaluate \(p(b)\):

\(r_2 = p(b) = \sum c_i b^i\).

The coefficients of the polynomial can be read off as the digits of \(r_2\) when it is written in base \(b\), with \(c_0\) as the last digit.
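The two-query strategy can be sketched in Python as follows. Here `p` is any Python function standing in for the person answering queries, and `recover_coefficients` is our own name; the digits are collected least significant first, so the result lists \(c_0, c_1, c_2, \ldots\).

```python
def recover_coefficients(p):
    """Recover c_0, c_1, ... of a polynomial with non-negative integer
    coefficients, using exactly two evaluations of p."""
    r1 = p(1)      # first query: the sum of all coefficients
    b = r1 + 1     # a base larger than every coefficient
    r2 = p(b)      # second query
    coefficients = []
    while r2 > 0:  # read off the base-b digits, least significant first
        r2, digit = divmod(r2, b)
        coefficients.append(digit)
    return coefficients  # an empty list means the zero polynomial

def secret(x):
    return 3 * x**3 + 2 * x + 5

print(recover_coefficients(secret))  # [5, 2, 0, 3]
```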

Hidden vector

In a very similar problem I have a hidden vector of \(n\) non-negative integers \([x_1, x_2, \ldots, x_n]\). Your goal is to find out my vector. You are allowed to name any vector \([y_1, y_2, \ldots, y_n]\) of \(n\) non-negative integers, and I’ll tell you the dot product of your vector and my vector.

Clearly by naming the \(n\) vectors \([1, 0, \ldots, 0], [0, 1, \ldots, 0], \ldots, [0, 0, \ldots, 1]\) you can find my vector.

What is the smallest number of vectors you need to name to be sure of my vector?

Only 2 vectors are required.

Proof

Let the vector you are trying to guess be \(\mathbf{x} = [x_1, x_2, \ldots, x_n]\).

First, attempt to determine \(\mathbf{x}\) with only one query, \(\mathbf{y} = [y_1, y_2, \ldots, y_n]\). Assume \(n > 1\). All entries in \(\mathbf{y}\) must be non-zero: if some value \(y_i\) were zero, we would have no information about entry \(x_i\). However, now we cannot tell the difference between the vectors \(\mathbf{x} = [y_2, 0, x_3, x_4, \ldots, x_n]\) and \(\mathbf{x} = [0, y_1, x_3, x_4, \ldots, x_n]\). Both result in the dot product \(\mathbf{x} \cdot \mathbf{y} = y_1 y_2 + \sum_{i=3}^n y_i x_i\).

Thus we must use at least 2 vectors, \(\mathbf{y}_1\) and \(\mathbf{y}_2\). Let \(\mathbf{y}_1 = [1, 1, \ldots, 1]\). This will result in the value \(r_1\) which is the sum of all values in \(\mathbf{x}\):

\[r_1 = \mathbf{x} \cdot \mathbf{y}_1 = \sum_{i=1}^n x_i\]

Let \(b = r_1+1\). Since the values in \(\mathbf{x}\) are non-negative, each \(x_i \le r_1\), so \(b\) is greater than all \(x_i\).

Now construct our second vector \(\mathbf{y}_2 = [1, b, b^2, b^3, \ldots, b^{n-1}]\). Our second result, \(r_2\), will be:

\[r_2 = \mathbf{x} \cdot \mathbf{y}_2 = \sum_{i=0}^{n-1} x_{i+1} b^i\]

The numbers \(x_i\) can be read as the digits of \(r_2\) when it is written in base \(b\), with \(x_1\) as the least significant digit.
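A minimal Python sketch of the two-vector strategy follows. The names `recover_vector`, `ask` and `hidden` are our own; `ask` stands in for the person who reports the dot product with the hidden vector.

```python
def recover_vector(ask, n):
    """Recover a hidden vector of n non-negative integers from two dot products.

    ask(y) returns the dot product of the hidden vector with y.
    """
    r1 = ask([1] * n)                   # first query: sum of the entries
    b = r1 + 1                          # a base larger than every entry
    r2 = ask([b**i for i in range(n)])  # second query: [1, b, b^2, ..., b^(n-1)]
    x = []
    for _ in range(n):                  # base-b digits, least significant first
        r2, digit = divmod(r2, b)
        x.append(digit)
    return x

hidden = [3, 0, 7, 1]

def ask(y):
    return sum(xi * yi for xi, yi in zip(hidden, y))

print(recover_vector(ask, len(hidden)))  # [3, 0, 7, 1]
```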

Open lockers

A school has a hallway with 100 lockers. All the lockers are closed.

A student walks down the hallway and opens every locker. A second student walks down the hallway and closes every second locker, starting at locker 2. A third student then walks down the hallway and changes the state of every third locker, starting at locker 3.

The \(n\)th student walking down the hallway changes the state of every \(n\)th locker starting at locker \(n\).

After 100 students have passed down the hallway, which lockers are open?

The lockers left open are the square numbers: 1, 4, 9, 16, 25, 36, 49, 64, 81 and 100.

Proof

The lockers that are left open are those that have been changed an odd number of times.

Since student \(n\) changes exactly the lockers that have \(n\) as a factor, the lockers changed an odd number of times are those with an odd number of factors.

Factors come in pairs (\(n = f_1 \times f_2\)), so for there to be an odd number of factors, a factor \(f\) must repeat itself in a pair (\(f = f_1 = f_2\)). In this case \(n = f \times f\), thus \(n\) is a square number.
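A direct simulation in Python (the function name `open_lockers` is our own) reproduces the result: each student toggles every locker whose number is a multiple of the student's number.

```python
def open_lockers(count=100):
    """Simulate `count` students toggling `count` lockers; return the open ones."""
    is_open = [False] * (count + 1)  # index 0 is unused
    for student in range(1, count + 1):
        for locker in range(student, count + 1, student):
            is_open[locker] = not is_open[locker]
    return [locker for locker in range(1, count + 1) if is_open[locker]]

print(open_lockers())  # [1, 4, 9, 16, 25, 36, 49, 64, 81, 100]
```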

Monkey and the coconuts

Five sailors were shipwrecked on an island with coconut trees and a monkey. They spent the first day gathering coconuts. During the night, one sailor woke up and decided to take his share of the coconuts. He divided them into five equal piles. One coconut was left over, so he gave it to the monkey, then hid his share, put the rest back together, and went back to sleep.

Soon a second sailor woke up and did the same thing. After dividing the coconuts into five piles, one coconut was left over which he gave to the monkey. He then hid his share, put the rest back together, and went back to bed. The third, fourth, and fifth sailor followed exactly the same procedure. The next morning, after they all woke up, they divided the remaining coconuts into five equal shares. This time no coconuts were left over.

How many coconuts were there in the original pile?

The smallest possible original pile is 3121 coconuts.

Proof

Each time a sailor visits the pile, one coconut is lost (to the monkey) and the remaining pile is reduced by a fifth. Thus the size of the pile goes from \(N \rightarrow \frac{4}{5}(N - 1)\).

Iterating this formula gets unwieldy, so we first rewrite it as the following equivalent formula: \(N \rightarrow \frac{4}{5}(N + 4) - 4\).

Now iterating the formula 5 times (once for each sailor) gives the following sequence:

\[\begin{align} N & \rightarrow \frac{4}{5}(N + 4) - 4 \\ & \rightarrow \frac{16}{25}(N + 4) - 4 \\ & \rightarrow \frac{64}{125}(N + 4) - 4 \\ & \rightarrow \frac{256}{625}(N + 4) - 4 \\ & \rightarrow \frac{1024}{3125}(N + 4) - 4 \end{align}\]

Since the number of coconuts in the final pile must be an integer and 1024 is relatively prime to 3125, \(N+4\) must be a multiple of 3125. The smallest positive multiple is 3125 itself, so \(N = 3125 - 4 = 3121\). The number left in the morning is \(\frac{1024}{3125} \times 3125 - 4 = 1020\), which is evenly divisible by 5 as required.

See Monkey and the coconuts on Wikipedia for other proofs and variations of the problem.
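A brute-force search in Python (function name our own) confirms the answer: it replays the five night-time visits and the morning division for each candidate pile size and reports the first size that works.

```python
def survives_night(pile):
    """True if `pile` coconuts allow all five night visits and the morning split."""
    for _ in range(5):
        # Each sailor needs one coconut left over after splitting into five piles.
        if pile < 1 or (pile - 1) % 5 != 0:
            return False
        pile = (pile - 1) // 5 * 4  # monkey gets 1, sailor hides a fifth
    return pile % 5 == 0  # the morning split has no coconuts left over

smallest = next(n for n in range(1, 10_000) if survives_night(n))
print(smallest)  # 3121
```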

Hiking in the mountains

At 6 am you start hiking on a path up a mountain. You walk at a variable pace, resting occasionally. At 6 pm that day you reach the top of the mountain. You camp out overnight.

The next morning you wake up at 6 am and start your descent down the mountain. Again you walk down the path at a variable pace, resting occasionally. The downhill hike is easier, but you are tired after yesterday and at 6 pm you reach the bottom.

Is it possible to find some time during the second day at which you are at exactly the same spot as you were at the same time on the first day?

You can always find a spot such that you were there at the same time on both the first and the second day.

Proof

Imagine a second hiker who starts climbing the same path at 6 am on the second day. Assume that this hiker walks at exactly the same pace you did on the first day and takes the same breaks you did.

Now on the second day, since you both start at opposite ends of the path at 6 am and finish at opposite ends at 6 pm, you must meet each other somewhere along the path. The place and time at which you meet give a spot you occupy at the same time of day on both days.

Negating function

Design a function \(f: \mathbb{Z} \rightarrow \mathbb{Z}\) such that \(f(f(n)) = -n\).

An example is:

\[f(n) = \operatorname{sgn}(n) - n(-1)^n\]

Proof

Suppose that \(f(x) = y\) for some \(x\) and \(y\). Then:

\[\begin{align} f(y) = & f(f(x)) = -x \\ f(-x) = & f(f(y)) = -y \\ f(-y) = & f(f(-x)) = x \\ \end{align}\]

Therefore repeated application of \(f\) results in a cycle: \(x \rightarrow y \rightarrow -x \rightarrow -y \rightarrow x\).

Taking \(x = 0\) shows that \(f(0) = 0\): if \(f(0) = y\), the cycle gives \(f(-0) = -y\), and since \(-0 = 0\) we get \(y = -y\), so \(y = 0\).

All other integers must be partitioned into groups of 4, each of which contains some \(x\), \(y\) and their negatives. To construct such a mapping, let us make the simplest choice, \(f(1) = 2\). This forces the cycle \(1 \rightarrow 2 \rightarrow -1 \rightarrow -2 \rightarrow 1\).

Likewise we can let \(f(3) = 4\), and in general for \(k > 0\) we can let \(f(2k-1) = 2k\), which forces the cycle \(2k-1 \rightarrow 2k \rightarrow -(2k-1) \rightarrow -2k \rightarrow 2k-1\). Thus we can define how the function acts on a number based on whether it is positive or negative and whether it is even or odd:

\[f(n) = \begin{cases} n + 1 & \text{ if } n > 0 \wedge n \text{ is odd} \\ -n + 1 & \text{ if } n > 0 \wedge n \text{ is even} \\ n - 1 & \text{ if } n < 0 \wedge n \text{ is odd} \\ -n - 1 & \text{ if } n < 0 \wedge n \text{ is even} \end{cases}\]

When \(n > 0\) we always add 1, and when \(n < 0\) we always subtract 1; in other words, we always add \(\operatorname{sgn}(n)\). Thus the above simplifies to:

\[f(n) = \begin{cases} \operatorname{sgn}(n) + n & \text{ if } n \text{ is odd} \\ \operatorname{sgn}(n) - n & \text{ if } n \text{ is even} \end{cases}\]

Now \((-1)^n = 1\) when \(n\) is even, and \((-1)^n = -1\) when \(n\) is odd. This allows us to simplify the above to our solution: \(f(n) = \operatorname{sgn}(n) - n(-1)^n\).

By construction it satisfies \(f(f(n)) = -n\) for \(n \ne 0\), and evaluating \(f(0) = \operatorname{sgn}(0) - 0 = 0\) shows that it holds for \(0\) as well. Thus our solution is correct.
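A quick numerical check in Python over a range of integers is sketched below (the helper `sgn` is our own, since Python has no built-in integer sign function). It computes \((-1)^n\) from the parity of \(n\), because Python's `(-1) ** n` returns a float when `n` is negative.

```python
def sgn(n: int) -> int:
    """Sign of n: -1, 0 or 1."""
    return (n > 0) - (n < 0)

def f(n: int) -> int:
    """f(n) = sgn(n) - n * (-1)^n, kept in integer arithmetic."""
    minus_one_to_n = 1 if n % 2 == 0 else -1  # (-1)^n from the parity of n
    return sgn(n) - n * minus_one_to_n

print([f(n) for n in (1, 2, -1, -2)])                  # [2, -1, -2, 1]
print(all(f(f(n)) == -n for n in range(-1000, 1001)))  # True
```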

Burning fuses

You have a lighter and two fuses. Each fuse takes an hour to burn, but they don’t burn at a steady rate throughout the hour.

How do you use the two fuses to create a 45 minute timer?

Light both ends of the first fuse and one end of the second fuse. When the first fuse has completely burnt, light the other end of the second fuse. When the second fuse has completely burnt, 45 minutes have passed.

Proof

When both ends of a fuse are lit, the fuse will burn in half the time. This is true however unevenly the fuse burns.

The first fuse will burn out completely in 30 minutes. At that time the second fuse has 30 minutes of burning left.